Modifying Unity's HDRP to Add a Pass That Renders a Pseudo-Fluid

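Introduction

After reading through the HDRP code I wanted to try customizing it, so this article documents that attempt. I edit the HDRP source code to add a custom pass and implement pseudo-fluid rendering.

It looks like a proper way to add custom passes will be provided in the future, at which point the information in this article will probably become useless.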

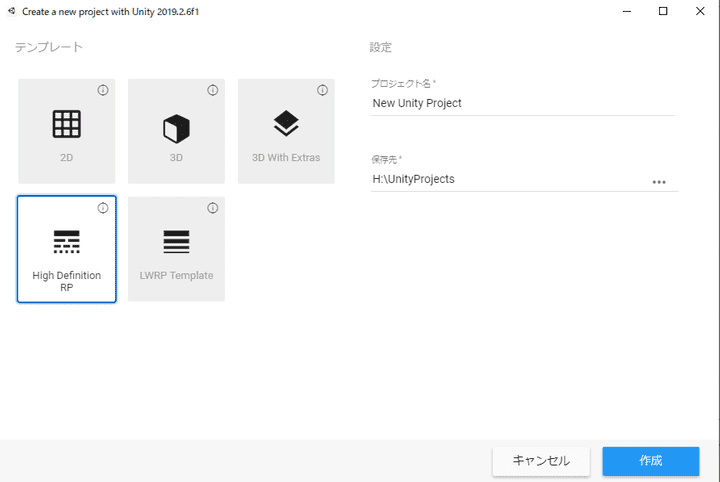

- Unity: 2019.2.6f1

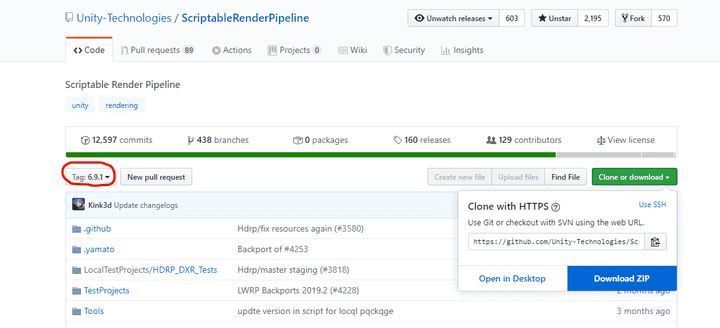

- HDRP: 6.9.1

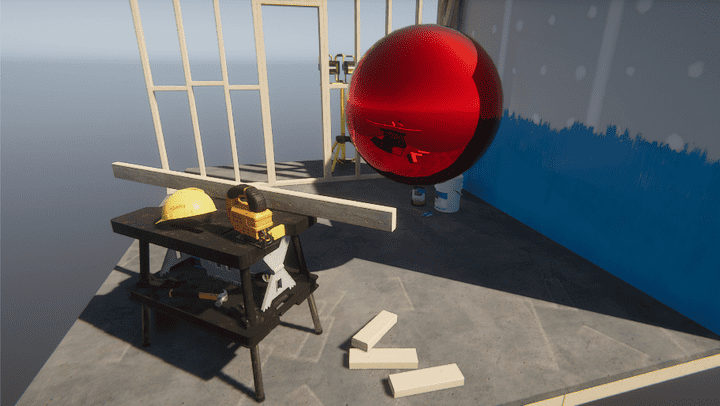

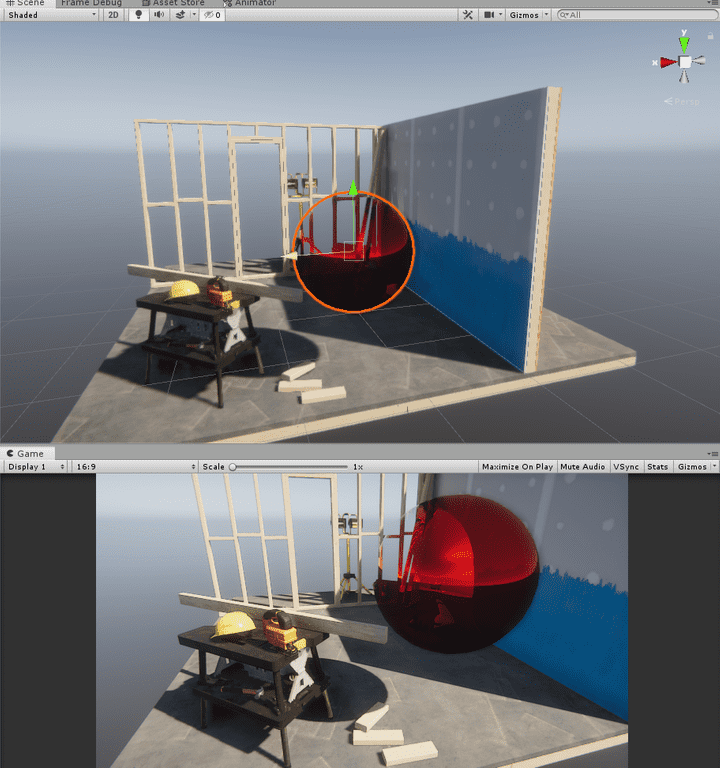

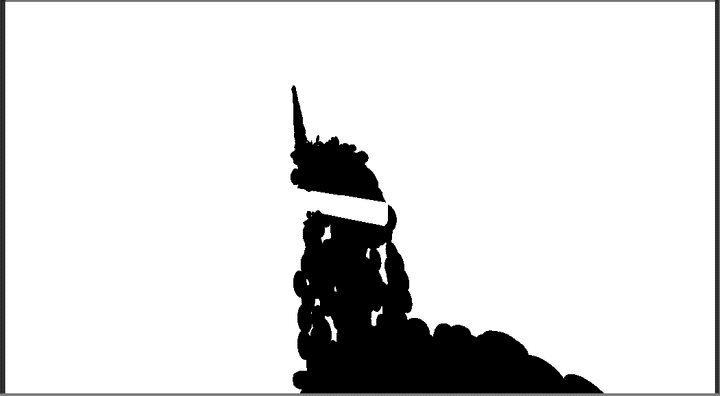

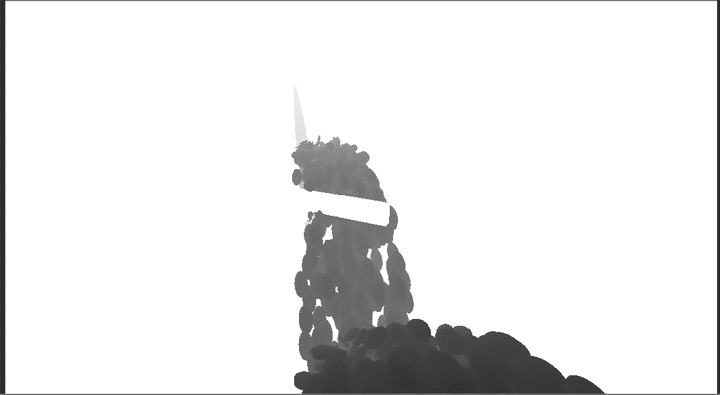

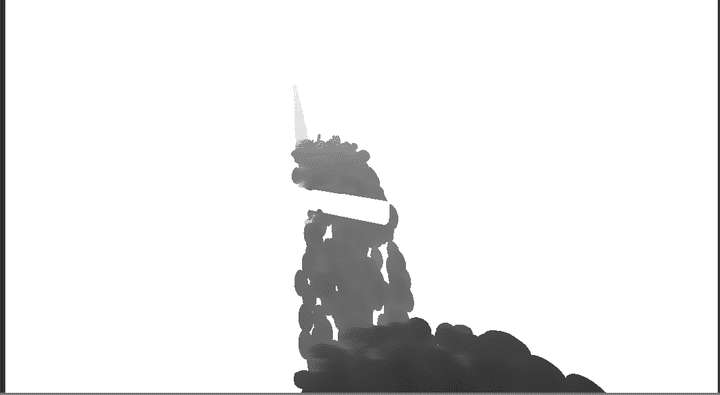

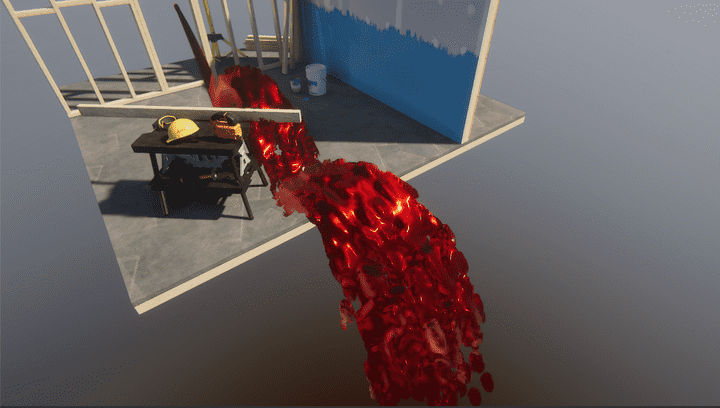

Yay. I managed to modify HDRP, add a custom pass, and render particles so that they look like a fluid. #Unity #HDRP pic.twitter.com/djYG5mEkeS

— 折登 いつき (@MatchaChoco010) September 23, 2019

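Skimming Through the HDRP Code

This time I use v6.9.1, which runs on Unity 2019.2.6f1, as the base. Note that you need the HDRP version that corresponds to the Unity version you are using.

The core of HDRP's rendering is Runtime/RenderPipeline/HDRenderPipeline.cs.

The Render method that starts at line 907 is the core SRP method that performs the rendering.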

protected override void Render(ScriptableRenderContext renderContext, Camera[] cameras)

{

if (!m_ValidAPI || cameras.Length == 0)

return;

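That said, the first part of the Render method is taken up by various settings, culling, and so on. It gets complicated because, for example, the light probes that a camera depends on are rendered first, but at line 1452 it calls a method named ExecuteRenderRequest. This method is the body of the rendering pipeline.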

using (new ProfilingSample(

cmd,

$"HDRenderPipeline::Render {renderRequest.hdCamera.camera.name}",

CustomSamplerId.HDRenderPipelineRender.GetSampler())

)

{

cmd.SetInvertCulling(renderRequest.cameraSettings.invertFaceCulling);

UnityEngine.Rendering.RenderPipeline.BeginCameraRendering(renderContext, renderRequest.hdCamera.camera);

ExecuteRenderRequest(renderRequest, renderContext, cmd, AOVRequestData.@default);

cmd.SetInvertCulling(false);

UnityEngine.Rendering.RenderPipeline.EndCameraRendering(renderContext, renderRequest.hdCamera.camera);

}

ExecuteRenderRequest runs from line 1491 to line 2028. It is a bit long, but pasted in full it looks like this.

void ExecuteRenderRequest(

RenderRequest renderRequest,

ScriptableRenderContext renderContext,

CommandBuffer cmd,

AOVRequestData aovRequest

)

{

InitializeGlobalResources(renderContext);

var hdCamera = renderRequest.hdCamera;

var camera = hdCamera.camera;

var cullingResults = renderRequest.cullingResults.cullingResults;

var hdProbeCullingResults = renderRequest.cullingResults.hdProbeCullingResults;

var decalCullingResults = renderRequest.cullingResults.decalCullResults;

var target = renderRequest.target;

// Updates RTHandle

hdCamera.BeginRender();

using (ListPool<RTHandle>.Get(out var aovBuffers))

{

aovRequest.AllocateTargetTexturesIfRequired(ref aovBuffers);

// If we render a reflection view or a preview we should not display any debug information

// This need to be call before ApplyDebugDisplaySettings()

if (camera.cameraType == CameraType.Reflection || camera.cameraType == CameraType.Preview)

{

// Neutral allow to disable all debug settings

m_CurrentDebugDisplaySettings = s_NeutralDebugDisplaySettings;

}

else

{

// Make sure we are in sync with the debug menu for the msaa count

m_MSAASamples = m_DebugDisplaySettings.data.msaaSamples;

m_SharedRTManager.SetNumMSAASamples(m_MSAASamples);

m_DebugDisplaySettings.UpdateCameraFreezeOptions();

m_CurrentDebugDisplaySettings = m_DebugDisplaySettings;

bool sceneLightingIsDisabled = CoreUtils.IsSceneLightingDisabled(hdCamera.camera);

if (m_CurrentDebugDisplaySettings.GetDebugLightingMode() != DebugLightingMode.MatcapView)

{

if(sceneLightingIsDisabled)

{

m_CurrentDebugDisplaySettings.SetDebugLightingMode(DebugLightingMode.MatcapView);

}

}

if(hdCamera.sceneLightingWasDisabledForCamera && !CoreUtils.IsSceneLightingDisabled(hdCamera.camera))

{

m_CurrentDebugDisplaySettings.SetDebugLightingMode(DebugLightingMode.None);

}

hdCamera.sceneLightingWasDisabledForCamera = sceneLightingIsDisabled;

}

aovRequest.SetupDebugData(ref m_CurrentDebugDisplaySettings);

#if ENABLE_RAYTRACING

// Must update after getting DebugDisplaySettings

m_RayTracingManager.rayCountManager.ClearRayCount(cmd, hdCamera);

#endif

m_DbufferManager.enableDecals = false;

if (hdCamera.frameSettings.IsEnabled(FrameSettingsField.Decals))

{

using (new ProfilingSample(null, "DBufferPrepareDrawData", CustomSamplerId.DBufferPrepareDrawData.GetSampler()))

{

// TODO: update singleton with DecalCullResults

m_DbufferManager.enableDecals = true; // mesh decals are renderers managed by c++ runtime and we have no way to query if any are visible, so set to true

DecalSystem.instance.LoadCullResults(decalCullingResults);

DecalSystem.instance.UpdateCachedMaterialData(); // textures, alpha or fade distances could've changed

DecalSystem.instance.CreateDrawData(); // prepare data is separate from draw

DecalSystem.instance.UpdateTextureAtlas(cmd); // as this is only used for transparent pass, would've been nice not to have to do this if no transparent renderers are visible, needs to happen after CreateDrawData

}

}

using (new ProfilingSample(cmd, "Volume Update", CustomSamplerId.VolumeUpdate.GetSampler()))

{

VolumeManager.instance.Update(hdCamera.volumeAnchor, hdCamera.volumeLayerMask);

}

// Do anything we need to do upon a new frame.

// The NewFrame must be after the VolumeManager update and before Resize because it uses properties set in NewFrame

LightLoopNewFrame(hdCamera.frameSettings);

// Apparently scissor states can leak from editor code. As it is not used currently in HDRP (appart from VR). We disable scissor at the beginning of the frame.

cmd.DisableScissorRect();

Resize(hdCamera);

m_PostProcessSystem.BeginFrame(cmd, hdCamera);

ApplyDebugDisplaySettings(hdCamera, cmd);

m_SkyManager.UpdateCurrentSkySettings(hdCamera);

SetupCameraProperties(hdCamera, renderContext, cmd);

PushGlobalParams(hdCamera, cmd);

// TODO: Find a correct place to bind these material textures

// We have to bind the material specific global parameters in this mode

m_MaterialList.ForEach(material => material.Bind(cmd));

// Frustum cull density volumes on the CPU. Can be performed as soon as the camera is set up.

DensityVolumeList densityVolumes = PrepareVisibleDensityVolumeList(hdCamera, cmd, m_Time);

// Note: Legacy Unity behave like this for ShadowMask

// When you select ShadowMask in Lighting panel it recompile shaders on the fly with the SHADOW_MASK keyword.

// However there is no C# function that we can query to know what mode have been select in Lighting Panel and it will be wrong anyway. Lighting Panel setup what will be the next bake mode. But until light is bake, it is wrong.

// Currently to know if you need shadow mask you need to go through all visible lights (of CullResult), check the LightBakingOutput struct and look at lightmapBakeType/mixedLightingMode. If one light have shadow mask bake mode, then you need shadow mask features (i.e extra Gbuffer).

// It mean that when we build a standalone player, if we detect a light with bake shadow mask, we generate all shader variant (with and without shadow mask) and at runtime, when a bake shadow mask light is visible, we dynamically allocate an extra GBuffer and switch the shader.

// So the first thing to do is to go through all the light: PrepareLightsForGPU

bool enableBakeShadowMask;

using (new ProfilingSample(cmd, "TP_PrepareLightsForGPU", CustomSamplerId.TPPrepareLightsForGPU.GetSampler()))

{

enableBakeShadowMask = PrepareLightsForGPU(cmd, hdCamera, cullingResults, hdProbeCullingResults, densityVolumes, m_CurrentDebugDisplaySettings, aovRequest);

}

// Configure all the keywords

ConfigureKeywords(enableBakeShadowMask, hdCamera, cmd);

hdCamera.xr.StartLegacyStereo(camera, cmd, renderContext);

ClearBuffers(hdCamera, cmd);

bool shouldRenderMotionVectorAfterGBuffer = RenderDepthPrepass(cullingResults, hdCamera, renderContext, cmd);

if (!shouldRenderMotionVectorAfterGBuffer)

{

// If objects motion vectors if enabled, this will render the objects with motion vector into the target buffers (in addition to the depth)

// Note: An object with motion vector must not be render in the prepass otherwise we can have motion vector write that should have been rejected

RenderObjectsMotionVectors(cullingResults, hdCamera, renderContext, cmd);

}

// Now that all depths have been rendered, resolve the depth buffer

m_SharedRTManager.ResolveSharedRT(cmd, hdCamera);

// This will bind the depth buffer if needed for DBuffer)

RenderDBuffer(hdCamera, cmd, renderContext, cullingResults);

// We can call DBufferNormalPatch after RenderDBuffer as it only affect forward material and isn't affected by RenderGBuffer

// This reduce lifteime of stencil bit

DBufferNormalPatch(hdCamera, cmd, renderContext, cullingResults);

#if ENABLE_RAYTRACING

bool validIndirectDiffuse = m_RaytracingIndirectDiffuse.ValidIndirectDiffuseState();

cmd.SetGlobalInt(HDShaderIDs._RaytracedIndirectDiffuse, validIndirectDiffuse ? 1 : 0);

#endif

RenderGBuffer(cullingResults, hdCamera, renderContext, cmd);

// We can now bind the normal buffer to be use by any effect

m_SharedRTManager.BindNormalBuffer(cmd);

// In both forward and deferred, everything opaque should have been rendered at this point so we can safely copy the depth buffer for later processing.

GenerateDepthPyramid(hdCamera, cmd, FullScreenDebugMode.DepthPyramid);

// Depth texture is now ready, bind it (Depth buffer could have been bind before if DBuffer is enable)

cmd.SetGlobalTexture(HDShaderIDs._CameraDepthTexture, m_SharedRTManager.GetDepthTexture());

if (shouldRenderMotionVectorAfterGBuffer)

{

// See the call RenderObjectsMotionVectors() above and comment

RenderObjectsMotionVectors(cullingResults, hdCamera, renderContext, cmd);

}

RenderCameraMotionVectors(cullingResults, hdCamera, renderContext, cmd);

hdCamera.xr.StopLegacyStereo(camera, cmd, renderContext);

// Caution: We require sun light here as some skies use the sun light to render, it means that UpdateSkyEnvironment must be called after PrepareLightsForGPU.

// TODO: Try to arrange code so we can trigger this call earlier and use async compute here to run sky convolution during other passes (once we move convolution shader to compute).

if(m_CurrentDebugDisplaySettings.GetDebugLightingMode() != DebugLightingMode.MatcapView)

UpdateSkyEnvironment(hdCamera, cmd);

hdCamera.xr.StartLegacyStereo(camera, cmd, renderContext);

#if ENABLE_RAYTRACING

bool raytracedIndirectDiffuse = m_RaytracingIndirectDiffuse.RenderIndirectDiffuse(hdCamera, cmd, renderContext, m_FrameCount);

if(raytracedIndirectDiffuse)

{

PushFullScreenDebugTexture(hdCamera, cmd, m_RaytracingIndirectDiffuse.GetIndirectDiffuseTexture(), FullScreenDebugMode.IndirectDiffuse);

}

#endif

#if UNITY_EDITOR

var showGizmos = camera.cameraType == CameraType.Game

|| camera.cameraType == CameraType.SceneView;

#endif

if (m_CurrentDebugDisplaySettings.IsDebugMaterialDisplayEnabled() || m_CurrentDebugDisplaySettings.IsMaterialValidationEnabled())

{

RenderDebugViewMaterial(cullingResults, hdCamera, renderContext, cmd);

}

else

{

if (!hdCamera.frameSettings.SSAORunsAsync())

m_AmbientOcclusionSystem.Render(cmd, hdCamera, m_SharedRTManager, renderContext, m_FrameCount);

CopyStencilBufferIfNeeded(cmd, hdCamera, m_SharedRTManager.GetDepthStencilBuffer(), m_SharedRTManager.GetStencilBufferCopy(), m_CopyStencil, m_CopyStencilForSSR);

// When debug is enabled we need to clear otherwise we may see non-shadows areas with stale values.

if (hdCamera.frameSettings.IsEnabled(FrameSettingsField.ContactShadows) && m_CurrentDebugDisplaySettings.data.fullScreenDebugMode == FullScreenDebugMode.ContactShadows)

{

HDUtils.SetRenderTarget(cmd, m_ContactShadowBuffer, ClearFlag.Color, Color.clear);

}

#if ENABLE_RAYTRACING

// Update the light clusters that we need to update

m_RayTracingManager.UpdateCameraData(cmd, hdCamera);

// We only request the light cluster if we are gonna use it for debug mode

if (FullScreenDebugMode.LightCluster == m_CurrentDebugDisplaySettings.data.fullScreenDebugMode)

{

var rSettings = VolumeManager.instance.stack.GetComponent<ScreenSpaceReflection>();

var rrSettings = VolumeManager.instance.stack.GetComponent<RecursiveRendering>();

HDRaytracingEnvironment rtEnv = m_RayTracingManager.CurrentEnvironment();

if (rSettings.enableRaytracing.value && rtEnv != null)

{

HDRaytracingLightCluster lightCluster = m_RayTracingManager.RequestLightCluster(rtEnv.reflLayerMask);

PushFullScreenDebugTexture(hdCamera, cmd, lightCluster.m_DebugLightClusterTexture, FullScreenDebugMode.LightCluster);

}

else if (rrSettings.enable.value && rtEnv != null)

{

HDRaytracingLightCluster lightCluster = m_RayTracingManager.RequestLightCluster(rtEnv.raytracedLayerMask);

PushFullScreenDebugTexture(hdCamera, cmd, lightCluster.m_DebugLightClusterTexture, FullScreenDebugMode.LightCluster);

}

}

#endif

hdCamera.xr.StopLegacyStereo(camera, cmd, renderContext);

var buildLightListTask = new HDGPUAsyncTask("Build light list", ComputeQueueType.Background);

// It is important that this task is in the same queue as the build light list due to dependency it has on it. If really need to move it, put an extra fence to make sure buildLightListTask has finished.

var volumeVoxelizationTask = new HDGPUAsyncTask("Volumetric voxelization", ComputeQueueType.Background);

var SSRTask = new HDGPUAsyncTask("Screen Space Reflection", ComputeQueueType.Background);

var SSAOTask = new HDGPUAsyncTask("SSAO", ComputeQueueType.Background);

var contactShadowsTask = new HDGPUAsyncTask("Screen Space Shadows", ComputeQueueType.Background);

var haveAsyncTaskWithShadows = false;

if (hdCamera.frameSettings.BuildLightListRunsAsync())

{

buildLightListTask.Start(cmd, renderContext, Callback, !haveAsyncTaskWithShadows);

haveAsyncTaskWithShadows = true;

void Callback(CommandBuffer asyncCmd)

=> BuildGPULightListsCommon(hdCamera, asyncCmd, m_SharedRTManager.GetDepthStencilBuffer(hdCamera.frameSettings.IsEnabled(FrameSettingsField.MSAA)), m_SharedRTManager.GetStencilBufferCopy());

}

if (hdCamera.frameSettings.VolumeVoxelizationRunsAsync())

{

volumeVoxelizationTask.Start(cmd, renderContext, Callback, !haveAsyncTaskWithShadows);

haveAsyncTaskWithShadows = true;

void Callback(CommandBuffer asyncCmd)

=> VolumeVoxelizationPass(hdCamera, asyncCmd, m_FrameCount, densityVolumes);

}

if (hdCamera.frameSettings.SSRRunsAsync())

{

SSRTask.Start(cmd, renderContext, Callback, !haveAsyncTaskWithShadows);

haveAsyncTaskWithShadows = true;

void Callback(CommandBuffer asyncCmd)

=> RenderSSR(hdCamera, asyncCmd, renderContext);

}

if (hdCamera.frameSettings.SSAORunsAsync())

{

void AsyncSSAODispatch(CommandBuffer asyncCmd) => m_AmbientOcclusionSystem.Dispatch(asyncCmd, hdCamera, m_SharedRTManager, m_FrameCount);

SSAOTask.Start(cmd, renderContext, AsyncSSAODispatch, !haveAsyncTaskWithShadows);

haveAsyncTaskWithShadows = true;

}

using (new ProfilingSample(cmd, "Render shadow maps", CustomSamplerId.RenderShadowMaps.GetSampler()))

{

// This call overwrites camera properties passed to the shader system.

RenderShadowMaps(renderContext, cmd, cullingResults, hdCamera);

hdCamera.SetupGlobalParams(cmd, m_Time, m_LastTime, m_FrameCount);

}

if (!hdCamera.frameSettings.SSRRunsAsync())

{

// Needs the depth pyramid and motion vectors, as well as the render of the previous frame.

RenderSSR(hdCamera, cmd, renderContext);

}

// Contact shadows needs the light loop so we do them after the build light list

if (hdCamera.frameSettings.BuildLightListRunsAsync())

{

buildLightListTask.EndWithPostWork(cmd, Callback);

void Callback()

{

var globalParams = PrepareLightLoopGlobalParameters(hdCamera);

PushLightLoopGlobalParams(globalParams, cmd);

// Run the contact shadow as they now need the light list

DispatchContactShadows();

}

}

else

{

using (new ProfilingSample(cmd, "Build Light list", CustomSamplerId.BuildLightList.GetSampler()))

{

BuildGPULightLists(hdCamera, cmd, m_SharedRTManager.GetDepthStencilBuffer(hdCamera.frameSettings.IsEnabled(FrameSettingsField.MSAA)), m_SharedRTManager.GetStencilBufferCopy());

}

DispatchContactShadows();

}

using (new ProfilingSample(cmd, "Render screen space shadows", CustomSamplerId.ScreenSpaceShadows.GetSampler()))

{

RenderScreenSpaceShadows(hdCamera, cmd);

}

// Contact shadows needs the light loop so we do them after the build light list

void DispatchContactShadows()

{

if (hdCamera.frameSettings.ContactShadowsRunAsync())

{

contactShadowsTask.Start(cmd, renderContext, ContactShadowStartCallback, !haveAsyncTaskWithShadows);

haveAsyncTaskWithShadows = true;

void ContactShadowStartCallback(CommandBuffer asyncCmd)

{

var firstMipOffsetY = m_SharedRTManager.GetDepthBufferMipChainInfo().mipLevelOffsets[1].y;

RenderContactShadows(hdCamera, m_ContactShadowBuffer, hdCamera.frameSettings.IsEnabled(FrameSettingsField.MSAA) ? m_SharedRTManager.GetDepthValuesTexture() : m_SharedRTManager.GetDepthTexture(), firstMipOffsetY, asyncCmd);

}

}

else

{

HDUtils.CheckRTCreated(m_ContactShadowBuffer);

int firstMipOffsetY = m_SharedRTManager.GetDepthBufferMipChainInfo().mipLevelOffsets[1].y;

RenderContactShadows(hdCamera, m_ContactShadowBuffer, hdCamera.frameSettings.IsEnabled(FrameSettingsField.MSAA) ? m_SharedRTManager.GetDepthValuesTexture() : m_SharedRTManager.GetDepthTexture(), firstMipOffsetY, cmd);

PushFullScreenDebugTexture(hdCamera, cmd, m_ContactShadowBuffer, FullScreenDebugMode.ContactShadows);

}

}

{

// Set fog parameters for volumetric lighting.

var visualEnv = VolumeManager.instance.stack.GetComponent<VisualEnvironment>();

visualEnv.PushFogShaderParameters(hdCamera, cmd);

}

if (hdCamera.frameSettings.VolumeVoxelizationRunsAsync())

{

volumeVoxelizationTask.End(cmd);

}

else

{

// Perform the voxelization step which fills the density 3D texture.

VolumeVoxelizationPass(hdCamera, cmd, m_FrameCount, densityVolumes);

}

// Render the volumetric lighting.

// The pass requires the volume properties, the light list and the shadows, and can run async.

VolumetricLightingPass(hdCamera, cmd, m_FrameCount);

SetMicroShadowingSettings(cmd);

if (hdCamera.frameSettings.SSAORunsAsync())

{

SSAOTask.EndWithPostWork(cmd, Callback);

void Callback() => m_AmbientOcclusionSystem.PostDispatchWork(cmd, hdCamera, m_SharedRTManager);

}

if (hdCamera.frameSettings.ContactShadowsRunAsync())

{

contactShadowsTask.EndWithPostWork(cmd, Callback);

void Callback()

{

SetContactShadowsTexture(hdCamera, m_ContactShadowBuffer, cmd);

PushFullScreenDebugTexture(hdCamera, cmd, m_ContactShadowBuffer, FullScreenDebugMode.ContactShadows);

}

}

else

{

SetContactShadowsTexture(hdCamera, m_ContactShadowBuffer, cmd);

}

if (hdCamera.frameSettings.SSRRunsAsync())

{

SSRTask.End(cmd);

}

hdCamera.xr.StartLegacyStereo(camera, cmd, renderContext);

RenderDeferredLighting(hdCamera, cmd);

RenderForwardOpaque(cullingResults, hdCamera, renderContext, cmd);

m_SharedRTManager.ResolveMSAAColor(cmd, hdCamera, m_CameraSssDiffuseLightingMSAABuffer, m_CameraSssDiffuseLightingBuffer);

m_SharedRTManager.ResolveMSAAColor(cmd, hdCamera, GetSSSBufferMSAA(), GetSSSBuffer());

// SSS pass here handle both SSS material from deferred and forward

RenderSubsurfaceScattering(hdCamera, cmd, hdCamera.frameSettings.IsEnabled(FrameSettingsField.MSAA) ? m_CameraColorMSAABuffer : m_CameraColorBuffer,

m_CameraSssDiffuseLightingBuffer, m_SharedRTManager.GetDepthStencilBuffer(hdCamera.frameSettings.IsEnabled(FrameSettingsField.MSAA)), m_SharedRTManager.GetDepthTexture());

RenderForwardEmissive(cullingResults, hdCamera, renderContext, cmd);

RenderSky(hdCamera, cmd);

RenderTransparentDepthPrepass(cullingResults, hdCamera, renderContext, cmd);

#if ENABLE_RAYTRACING

m_RaytracingRenderer.Render(hdCamera, cmd, m_CameraColorBuffer, renderContext, cullingResults);

#endif

// Render pre refraction objects

RenderForwardTransparent(cullingResults, hdCamera, true, renderContext, cmd);

if (hdCamera.frameSettings.IsEnabled(FrameSettingsField.RoughRefraction))

{

// First resolution of the color buffer for the color pyramid

m_SharedRTManager.ResolveMSAAColor(cmd, hdCamera, m_CameraColorMSAABuffer, m_CameraColorBuffer);

RenderColorPyramid(hdCamera, cmd, true);

}

// Bind current color pyramid for shader graph SceneColorNode on transparent objects

var currentColorPyramid = hdCamera.GetCurrentFrameRT((int)HDCameraFrameHistoryType.ColorBufferMipChain);

cmd.SetGlobalTexture(HDShaderIDs._ColorPyramidTexture, currentColorPyramid);

// Render all type of transparent forward (unlit, lit, complex (hair...)) to keep the sorting between transparent objects.

RenderForwardTransparent(cullingResults, hdCamera, false, renderContext, cmd);

// We push the motion vector debug texture here as transparent object can overwrite the motion vector texture content.

if(m_Asset.currentPlatformRenderPipelineSettings.supportMotionVectors)

PushFullScreenDebugTexture(hdCamera, cmd, m_SharedRTManager.GetMotionVectorsBuffer(), FullScreenDebugMode.MotionVectors);

// Second resolve the color buffer for finishing the frame

m_SharedRTManager.ResolveMSAAColor(cmd, hdCamera, m_CameraColorMSAABuffer, m_CameraColorBuffer);

// Render All forward error

RenderForwardError(cullingResults, hdCamera, renderContext, cmd);

DownsampleDepthForLowResTransparency(hdCamera, cmd);

RenderLowResTransparent(cullingResults, hdCamera, renderContext, cmd);

UpsampleTransparent(hdCamera, cmd);

// Fill depth buffer to reduce artifact for transparent object during postprocess

RenderTransparentDepthPostpass(cullingResults, hdCamera, renderContext, cmd);

RenderColorPyramid(hdCamera, cmd, false);

AccumulateDistortion(cullingResults, hdCamera, renderContext, cmd);

RenderDistortion(hdCamera, cmd);

PushFullScreenDebugTexture(hdCamera, cmd, m_CameraColorBuffer, FullScreenDebugMode.NanTracker);

PushFullScreenLightingDebugTexture(hdCamera, cmd, m_CameraColorBuffer);

#if UNITY_EDITOR

// Render gizmos that should be affected by post processes

if (showGizmos)

{

Gizmos.exposure = m_PostProcessSystem.GetExposureTexture(hdCamera).rt;

RenderGizmos(cmd, camera, renderContext, GizmoSubset.PreImageEffects);

}

#endif

}

// At this point, m_CameraColorBuffer has been filled by either debug views are regular rendering so we can push it here.

PushColorPickerDebugTexture(cmd, hdCamera, m_CameraColorBuffer);

aovRequest.PushCameraTexture(cmd, AOVBuffers.Color, hdCamera, m_CameraColorBuffer, aovBuffers);

RenderPostProcess(cullingResults, hdCamera, target.id, renderContext, cmd);

// In developer build, we always render post process in m_AfterPostProcessBuffer at (0,0) in which we will then render debug.

// Because of this, we need another blit here to the final render target at the right viewport.

if (!HDUtils.PostProcessIsFinalPass() || aovRequest.isValid)

{

hdCamera.ExecuteCaptureActions(m_IntermediateAfterPostProcessBuffer, cmd);

RenderDebug(hdCamera, cmd, cullingResults);

using (new ProfilingSample(cmd, "Final Blit (Dev Build Only)"))

{

var finalBlitParams = PrepareFinalBlitParameters(hdCamera);

BlitFinalCameraTexture(finalBlitParams, m_BlitPropertyBlock, m_IntermediateAfterPostProcessBuffer, target.id, cmd);

}

aovRequest.PushCameraTexture(cmd, AOVBuffers.Output, hdCamera, m_IntermediateAfterPostProcessBuffer, aovBuffers);

}

// XR mirror view and blit do device

hdCamera.xr.StopLegacyStereo(camera, cmd, renderContext);

hdCamera.xr.EndCamera(hdCamera, renderContext, cmd);

// Send all required graphics buffer to client systems.

SendGraphicsBuffers(cmd, hdCamera);

// Due to our RT handle system we don't write into the backbuffer depth buffer (as our depth buffer can be bigger than the one provided)

// So we need to do a copy of the corresponding part of RT depth buffer in the target depth buffer in various situation:

// - RenderTexture (camera.targetTexture != null) has a depth buffer (camera.targetTexture.depth != 0)

// - We are rendering into the main game view (i.e not a RenderTexture camera.cameraType == CameraType.Game && hdCamera.camera.targetTexture == null) in the editor for allowing usage of Debug.DrawLine and Debug.Ray.

// - We draw Gizmo/Icons in the editor (hdCamera.camera.targetTexture != null && camera.targetTexture.depth != 0 - The Scene view has a targetTexture and a depth texture)

// TODO: If at some point we get proper render target aliasing, we will be able to use the provided depth texture directly with our RT handle system

// Note: Debug.DrawLine and Debug.Ray only work in editor, not in player

var copyDepth = hdCamera.camera.targetTexture != null && hdCamera.camera.targetTexture.depth != 0;

#if UNITY_EDITOR

copyDepth = copyDepth || hdCamera.isMainGameView; // Specific case of Debug.DrawLine and Debug.Ray

#endif

if (copyDepth)

{

using (new ProfilingSample(cmd, "Copy Depth in Target Texture", CustomSamplerId.CopyDepth.GetSampler()))

{

cmd.SetRenderTarget(target.id);

cmd.SetViewport(hdCamera.finalViewport);

m_CopyDepthPropertyBlock.SetTexture(HDShaderIDs._InputDepth, m_SharedRTManager.GetDepthStencilBuffer());

// When we are Main Game View we need to flip the depth buffer ourselves as we are after postprocess / blit that have already flipped the screen

m_CopyDepthPropertyBlock.SetInt("_FlipY", hdCamera.isMainGameView ? 1 : 0);

CoreUtils.DrawFullScreen(cmd, m_CopyDepth, m_CopyDepthPropertyBlock);

}

}

aovRequest.PushCameraTexture(cmd, AOVBuffers.DepthStencil, hdCamera, m_SharedRTManager.GetDepthStencilBuffer(), aovBuffers);

aovRequest.PushCameraTexture(cmd, AOVBuffers.Normals, hdCamera, m_SharedRTManager.GetNormalBuffer(), aovBuffers);

if (m_Asset.currentPlatformRenderPipelineSettings.supportMotionVectors)

aovRequest.PushCameraTexture(cmd, AOVBuffers.MotionVectors, hdCamera, m_SharedRTManager.GetMotionVectorsBuffer(), aovBuffers);

#if UNITY_EDITOR

// We need to make sure the viewport is correctly set for the editor rendering. It might have been changed by debug overlay rendering just before.

cmd.SetViewport(hdCamera.finalViewport);

// Render overlay Gizmos

if (showGizmos)

RenderGizmos(cmd, camera, renderContext, GizmoSubset.PostImageEffects);

#endif

aovRequest.Execute(cmd, aovBuffers, RenderOutputProperties.From(hdCamera));

}

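}

In it you can find calls such as `ClearBuffers(hdCamera, cmd);`, `RenderDBuffer(hdCamera, cmd, renderContext, cullingResults);`, `RenderGBuffer(cullingResults, hdCamera, renderContext, cmd);`, `RenderCameraMotionVectors(cullingResults, hdCamera, renderContext, cmd);`, `RenderShadowMaps(renderContext, cmd, cullingResults, hdCamera);`, and so on.

You can see that each pass is factored out into its own method, and that these methods together make up the sequence of rendering passes.

By mixing a method you wrote yourself into this sequence, you can add a rendering pass.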
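The idea of slotting your own method into that sequence can be sketched without Unity at all. The following is a minimal, self-contained illustration, not HDRP code: `PassOrderSketch`, `BuildFrame`, and `RenderMyPass` are hypothetical names, the pass bodies only record that they ran, and placing the custom pass right after the G-Buffer pass is an assumption made here because the article goes on to write into the G-Buffer.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical stand-in for a per-camera render function.
// None of these members are HDRP APIs; they only mirror the structure
// "one method per pass, called in a fixed order".
public static class PassOrderSketch
{
    public static List<string> BuildFrame(bool withCustomPass)
    {
        var executed = new List<string>();

        // Each pass is its own (local) method, as in ExecuteRenderRequest.
        void ClearBuffers() => executed.Add("ClearBuffers");
        void RenderGBuffer() => executed.Add("RenderGBuffer");
        void RenderMyPass() => executed.Add("RenderMyPass"); // the added pass (hypothetical)
        void RenderDeferredLighting() => executed.Add("RenderDeferredLighting");

        ClearBuffers();
        RenderGBuffer();
        if (withCustomPass)
            RenderMyPass(); // assumed position: after the G-Buffer pass, before lighting
        RenderDeferredLighting();

        return executed;
    }

    public static void Main()
    {
        // Print the order in which the passes ran for one frame.
        Console.WriteLine(string.Join(" -> ", BuildFrame(withCustomPass: true)));
    }
}
```

In the real pipeline the added method would receive the same kind of arguments as its neighbours (culling results, camera, render context, command buffer), and its position decides which buffers are already filled when it runs.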

Preparing to Modify HDRP

First, create an HDRP project in the usual way. The Unity version is 2019.2.6f1.

Download the ScriptableRenderPipeline project at tag 6.9.1 from GitHub.

Place com.unity.render-pipelines.high-definition somewhere suitable, such as the project root, and then edit Packages/manifest.json.

{

"dependencies": {

"com.unity.collab-proxy": "1.2.16",

"com.unity.ext.nunit": "1.0.0",

"com.unity.ide.rider": "1.1.0",

"com.unity.ide.vscode": "1.1.0",

"com.unity.package-manager-ui": "2.2.0",

- "com.unity.render-pipelines.high-definition": "6.9.0-preview",

+ "com.unity.render-pipelines.high-definition": "file:../com.unity.render-pipelines.high-definition",

"com.unity.test-framework": "1.0.13",

"com.unity.textmeshpro": "2.0.1",

"com.unity.timeline": "1.1.0",

"com.unity.ugui": "1.0.0",

"com.unity.modules.ai": "1.0.0",

"com.unity.modules.androidjni": "1.0.0",

"com.unity.modules.animation": "1.0.0",

"com.unity.modules.assetbundle": "1.0.0",

"com.unity.modules.audio": "1.0.0",

"com.unity.modules.cloth": "1.0.0",

"com.unity.modules.director": "1.0.0",

"com.unity.modules.imageconversion": "1.0.0",

"com.unity.modules.imgui": "1.0.0",

"com.unity.modules.jsonserialize": "1.0.0",

"com.unity.modules.particlesystem": "1.0.0",

"com.unity.modules.physics": "1.0.0",

"com.unity.modules.physics2d": "1.0.0",

"com.unity.modules.screencapture": "1.0.0",

"com.unity.modules.terrain": "1.0.0",

"com.unity.modules.terrainphysics": "1.0.0",

"com.unity.modules.tilemap": "1.0.0",

"com.unity.modules.ui": "1.0.0",

"com.unity.modules.uielements": "1.0.0",

"com.unity.modules.umbra": "1.0.0",

"com.unity.modules.unityanalytics": "1.0.0",

"com.unity.modules.unitywebrequest": "1.0.0",

"com.unity.modules.unitywebrequestassetbundle": "1.0.0",

"com.unity.modules.unitywebrequestaudio": "1.0.0",

"com.unity.modules.unitywebrequesttexture": "1.0.0",

"com.unity.modules.unitywebrequestwww": "1.0.0",

"com.unity.modules.vehicles": "1.0.0",

"com.unity.modules.video": "1.0.0",

"com.unity.modules.vr": "1.0.0",

"com.unity.modules.wind": "1.0.0",

"com.unity.modules.xr": "1.0.0"

}

}

If you now open the Unity project and it starts without any errors, the setup worked. The locally placed HDRP is the one in use, so from here on you can edit it directly.

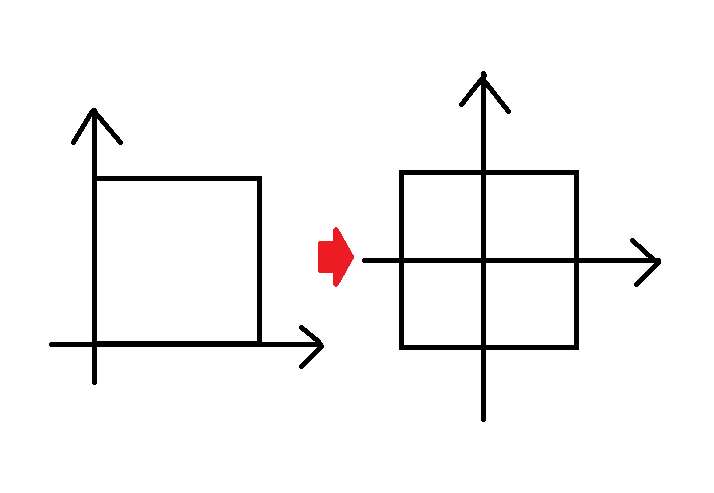
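If the project fails to find the package, it helps to check which folder the `file:` value actually points to. The sketch below assumes that a relative `file:` path is resolved against the project's Packages folder (the folder that contains manifest.json), which matches placing the package at the project root with `file:../`. `LocalPackagePath` and `Resolve` are hypothetical helper names for illustration and are not part of Unity.

```csharp
using System;
using System.IO;

public static class LocalPackagePath
{
    // Resolves a "file:" dependency value against the Packages folder.
    // Assumption: relative paths are interpreted relative to that folder.
    public static string Resolve(string packagesDir, string dependencyValue)
    {
        const string prefix = "file:";
        if (!dependencyValue.StartsWith(prefix, StringComparison.Ordinal))
            throw new ArgumentException("Not a file: dependency", nameof(dependencyValue));

        string relative = dependencyValue.Substring(prefix.Length);
        // GetFullPath collapses the ".." segment; the folder does not need to exist.
        return Path.GetFullPath(Path.Combine(packagesDir, relative));
    }

    public static void Main()
    {
        // Hypothetical project location used only for this demonstration.
        string packagesDir = Path.Combine(Path.GetTempPath(), "MyProject", "Packages");
        Console.WriteLine(Resolve(packagesDir, "file:../com.unity.render-pipelines.high-definition"));
    }
}
```

With the manifest entry shown above, the resolved folder is a sibling of Packages, that is, directly under the project root.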

Writing to the G-Buffer from a Custom Pass

For the new pass, I create a shader by rewriting Lit.shader.

Shader "HDRP/MyPass"

{

Properties

{

...

}

HLSLINCLUDE

...

ENDHLSL

SubShader

{

// This tags allow to use the shader replacement features

Tags{ "RenderPipeline"="HDRenderPipeline" "RenderType" = "HDLitShader" }

Pass

{

Name "SceneSelectionPass"

Tags { "LightMode" = "SceneSelectionPass" }

...

}

// Extracts information for lightmapping, GI (emission, albedo, ...)

// This pass it not used during regular rendering.

Pass

{

Name "META"

Tags{ "LightMode" = "META" }

...

}

Pass

{

Name "ShadowCaster"

Tags{ "LightMode" = "ShadowCaster" }

...

}

Pass

{

Name "DepthOnly"

Tags{ "LightMode" = "DepthOnly" }

...

}

Pass

{

Name "MotionVectors"

Tags{ "LightMode" = "MotionVectors" } // Caution, this need to be call like this to setup the correct parameters by C++ (legacy Unity)

...

}

Pass

{

Name "DistortionVectors"

Tags { "LightMode" = "DistortionVectors" } // This will be only for transparent object based on the RenderQueue index

...

}

Pass

{

Name "MyPass"

Tags { "lightMode" = "MyPass" }

Cull Back

ZTest On

ZWrite On

Stencil

{

WriteMask [_StencilWriteMaskGBuffer]

Ref [_StencilRefGBuffer]

Comp Always

Pass Replace

}

HLSLPROGRAM

#pragma multi_compile _ DEBUG_DISPLAY

#pragma multi_compile _ LIGHTMAP_ON

#pragma multi_compile _ DIRLIGHTMAP_COMBINED

#pragma multi_compile _ DYNAMICLIGHTMAP_ON

#pragma multi_compile _ SHADOWS_SHADOWMASK

// Setup DECALS_OFF so the shader stripper can remove variants

#pragma multi_compile DECALS_OFF DECALS_3RT DECALS_4RT

#pragma multi_compile _ LIGHT_LAYERS

#define SHADERPASS SHADERPASS_GBUFFER

#include "Packages/com.unity.render-pipelines.high-definition/Runtime/Material/Material.hlsl"

#include "Packages/com.unity.render-pipelines.high-definition/Runtime/Material/Lit/Lit.hlsl"

#include "Packages/com.unity.render-pipelines.high-definition/Runtime/Material/Lit/ShaderPass/LitSharePass.hlsl"

#include "Packages/com.unity.render-pipelines.high-definition/Runtime/Material/Lit/LitData.hlsl"

#include "Packages/com.unity.render-pipelines.high-definition/Runtime/RenderPipeline/ShaderPass/VertMesh.hlsl"

PackedVaryingsType Vert(AttributesMesh inputMesh)

{

VaryingsType varyingsType;

varyingsType.vmesh = VertMesh(inputMesh);

return PackVaryingsType(varyingsType);

}

#ifdef TESSELLATION_ON

PackedVaryingsToPS VertTesselation(VaryingsToDS input)

{

VaryingsToPS output;

output.vmesh = VertMeshTesselation(input.vmesh);

return PackVaryingsToPS(output);

}

#include "Packages/com.unity.render-pipelines.high-definition/Runtime/RenderPipeline/ShaderPass/TessellationShare.hlsl"

#endif // TESSELLATION_ON

void Frag( PackedVaryingsToPS packedInput,

OUTPUT_GBUFFER(outGBuffer)

#ifdef _DEPTHOFFSET_ON

, out float outputDepth : SV_Depth

#endif

)

{

UNITY_SETUP_STEREO_EYE_INDEX_POST_VERTEX(packedInput);

FragInputs input = UnpackVaryingsMeshToFragInputs(packedInput.vmesh);

// input.positionSS is SV_Position

PositionInputs posInput = GetPositionInput(input.positionSS.xy, _ScreenSize.zw, input.positionSS.z, input.positionSS.w, input.positionRWS);

#ifdef VARYINGS_NEED_POSITION_WS

float3 V = GetWorldSpaceNormalizeViewDir(input.positionRWS);

#else

// Unused

float3 V = float3(1.0, 1.0, 1.0); // Avoid the division by 0

#endif

SurfaceData surfaceData;

BuiltinData builtinData;

GetSurfaceAndBuiltinData(input, V, posInput, surfaceData, builtinData);

// Modify surfaceData

surfaceData.baseColor = float3(1.0, 0.0, 0.0);

surfaceData.specularOcclusion = 1.0;

// surfaceData.normalWS

surfaceData.perceptualSmoothness = 1.0;

surfaceData.ambientOcclusion = 0.0;

surfaceData.metallic = 1.0;

surfaceData.coatMask = 1.0;

// End of surfaceData modifications

ENCODE_INTO_GBUFFER(surfaceData, builtinData, posInput.positionSS, outGBuffer);

#ifdef _DEPTHOFFSET_ON

outputDepth = posInput.deviceDepth;

#endif

}

#pragma vertex Vert

#pragma fragment Frag

ENDHLSL

}

}

// CustomEditor "Experimental.Rendering.HDPipeline.LitGUI"

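}

I removed the GBuffer, Forward, and Transparent passes, which will not be needed here. Ray tracing is not used either, so the DXR passes are removed for now as well.

Finally, a new pass named MyPass is added at the end. It is based on the GBuffer pass, using HDRP's existing GBuffer pass implementation as the reference.

In Frag, the SurfaceData is fetched and then overwritten.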

void Frag( PackedVaryingsToPS packedInput,

OUTPUT_GBUFFER(outGBuffer)

#ifdef _DEPTHOFFSET_ON

, out float outputDepth : SV_Depth

#endif

)

{

UNITY_SETUP_STEREO_EYE_INDEX_POST_VERTEX(packedInput);

FragInputs input = UnpackVaryingsMeshToFragInputs(packedInput.vmesh);

// input.positionSS is SV_Position

PositionInputs posInput = GetPositionInput(input.positionSS.xy, _ScreenSize.zw, input.positionSS.z, input.positionSS.w, input.positionRWS);

#ifdef VARYINGS_NEED_POSITION_WS

float3 V = GetWorldSpaceNormalizeViewDir(input.positionRWS);

#else

// Unused

float3 V = float3(1.0, 1.0, 1.0); // Avoid the division by 0

#endif

SurfaceData surfaceData;

BuiltinData builtinData;

GetSurfaceAndBuiltinData(input, V, posInput, surfaceData, builtinData);

// Modify surfaceData

surfaceData.baseColor = float3(1.0, 0.0, 0.0);

surfaceData.specularOcclusion = 1.0;

// surfaceData.normalWS

surfaceData.perceptualSmoothness = 1.0;

surfaceData.ambientOcclusion = 0.0;

surfaceData.metallic = 1.0;

surfaceData.coatMask = 1.0;

// End of surfaceData modifications

ENCODE_INTO_GBUFFER(surfaceData, builtinData, posInput.positionSS, outGBuffer);

#ifdef _DEPTHOFFSET_ON

outputDepth = posInput.deviceDepth;

#endif

}

For reference, SurfaceData is defined as follows.

ScriptableRenderPipeline/Lit.cs at 6.9.1 · Unity-Technologies/ScriptableRenderPipeline

// Main structure that store the user data (i.e user input of master node in material graph)

[GenerateHLSL(PackingRules.Exact, false, false, true, 1000)]

public struct SurfaceData

{

[SurfaceDataAttributes("MaterialFeatures")]

public uint materialFeatures;

// Standard

[MaterialSharedPropertyMapping(MaterialSharedProperty.Albedo)]

[SurfaceDataAttributes("Base Color", false, true, FieldPrecision.Real)]

public Vector3 baseColor;

[SurfaceDataAttributes("Specular Occlusion", precision = FieldPrecision.Real)]

public float specularOcclusion;

[MaterialSharedPropertyMapping(MaterialSharedProperty.Normal)]

[SurfaceDataAttributes(new string[] {"Normal", "Normal View Space"}, true)]

public Vector3 normalWS;

[MaterialSharedPropertyMapping(MaterialSharedProperty.Smoothness)]

[SurfaceDataAttributes("Smoothness", precision = FieldPrecision.Real)]

public float perceptualSmoothness;

[MaterialSharedPropertyMapping(MaterialSharedProperty.AmbientOcclusion)]

[SurfaceDataAttributes("Ambient Occlusion", precision = FieldPrecision.Real)]

public float ambientOcclusion;

[MaterialSharedPropertyMapping(MaterialSharedProperty.Metal)]

[SurfaceDataAttributes("Metallic", precision = FieldPrecision.Real)]

public float metallic;

[SurfaceDataAttributes("Coat mask", precision = FieldPrecision.Real)]

public float coatMask;

// MaterialFeature dependent attribute

// Specular Color

[MaterialSharedPropertyMapping(MaterialSharedProperty.Specular)]

[SurfaceDataAttributes("Specular Color", false, true, FieldPrecision.Real)]

public Vector3 specularColor;

// SSS

[SurfaceDataAttributes("Diffusion Profile Hash")]

public uint diffusionProfileHash;

[SurfaceDataAttributes("Subsurface Mask", precision = FieldPrecision.Real)]

public float subsurfaceMask;

// Transmission

// + Diffusion Profile

[SurfaceDataAttributes("Thickness", precision = FieldPrecision.Real)]

public float thickness;

// Anisotropic

[SurfaceDataAttributes("Tangent", true)]

public Vector3 tangentWS;

[SurfaceDataAttributes("Anisotropy", precision = FieldPrecision.Real)]

public float anisotropy; // anisotropic ratio(0->no isotropic; 1->full anisotropy in tangent direction, -1->full anisotropy in bitangent direction)

// Iridescence

[SurfaceDataAttributes("Iridescence Layer Thickness", precision = FieldPrecision.Real)]

public float iridescenceThickness;

[SurfaceDataAttributes("Iridescence Mask", precision = FieldPrecision.Real)]

public float iridescenceMask;

// Forward property only

[SurfaceDataAttributes(new string[] { "Geometric Normal", "Geometric Normal View Space" }, true)]

public Vector3 geomNormalWS;

// Transparency

// Reuse thickness from SSS

[SurfaceDataAttributes("Index of refraction", precision = FieldPrecision.Real)]

public float ior;

[SurfaceDataAttributes("Transmittance Color", precision = FieldPrecision.Real)]

public Vector3 transmittanceColor;

[SurfaceDataAttributes("Transmittance Absorption Distance", precision = FieldPrecision.Real)]

public float atDistance;

[SurfaceDataAttributes("Transmittance mask", precision = FieldPrecision.Real)]

public float transmittanceMask;

};

Since the struct is annotated with [GenerateHLSL], I believe the HLSL is generated from this C# code.
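To make the idea of generating HLSL from a C# struct concrete, here is a minimal sketch of an attribute-driven generator. It is not Unity's actual generator: `GenerateHlslSketchAttribute`, `Vec3`, `DemoSurfaceData`, and `HlslStructSketch` are all hypothetical names made up for this illustration, and the type mapping covers only the few field types used in the demo struct.

```csharp
using System;
using System.Reflection;
using System.Text;

// Hypothetical marker attribute. This is NOT Unity's GenerateHLSL attribute.
[AttributeUsage(AttributeTargets.Struct)]
public sealed class GenerateHlslSketchAttribute : Attribute { }

// Stand-in for UnityEngine.Vector3 so the sketch compiles without Unity.
public struct Vec3 { public float x, y, z; }

// Hypothetical, heavily shortened stand-in for SurfaceData.
[GenerateHlslSketch]
public struct DemoSurfaceData
{
    public uint materialFeatures;
    public Vec3 baseColor;
    public float specularOcclusion;
    public Vec3 normalWS;
}

public static class HlslStructSketch
{
    // Maps a C# field type to an HLSL type name (only the types used above).
    static string ToHlslType(Type t)
    {
        if (t == typeof(uint)) return "uint";
        if (t == typeof(float)) return "float";
        if (t == typeof(Vec3)) return "float3";
        throw new NotSupportedException(t.Name);
    }

    // Emits an HLSL struct declaration for a type tagged with the marker attribute.
    public static string Generate(Type type)
    {
        if (type.GetCustomAttribute<GenerateHlslSketchAttribute>() == null)
            throw new ArgumentException("type is not tagged for generation");

        FieldInfo[] fields = type.GetFields(BindingFlags.Public | BindingFlags.Instance);
        // GetFields does not document an ordering, so sort by metadata token,
        // which follows declaration order in practice.
        Array.Sort(fields, (a, b) => a.MetadataToken.CompareTo(b.MetadataToken));

        var sb = new StringBuilder();
        sb.Append("struct ").Append(type.Name).Append("\n{\n");
        foreach (FieldInfo f in fields)
            sb.Append("    ").Append(ToHlslType(f.FieldType)).Append(' ').Append(f.Name).Append(";\n");
        sb.Append("};\n");
        return sb.ToString();
    }

    public static void Main()
    {
        // Prints the generated HLSL struct text to the console.
        Console.Write(Generate(typeof(DemoSurfaceData)));
    }
}
```

The point of the sketch is only that the C# struct is the single source of truth and the shader-side declaration is derived from it by reflection, so the two cannot drift apart. The real HDRP generator handles many more cases, such as precision, packing rules, and debug views.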

This completes the shader, with the unneeded passes removed and MyPass added. Next, a pass for this shader is added to the render pipeline.

At line 1638 inside ExecuteRenderRequest, right after RenderGBuffer, I add a call to RenderMyPass.

// This will bind the depth buffer if needed for DBuffer)

RenderDBuffer(hdCamera, cmd, renderContext, cullingResults);

// We can call DBufferNormalPatch after RenderDBuffer as it only affect forward material and isn't affected by RenderGBuffer

// This reduce lifteime of stencil bit

DBufferNormalPatch(hdCamera, cmd, renderContext, cullingResults);

#if ENABLE_RAYTRACING

bool validIndirectDiffuse = m_RaytracingIndirectDiffuse.ValidIndirectDiffuseState();

cmd.SetGlobalInt(HDShaderIDs._RaytracedIndirectDiffuse, validIndirectDiffuse ? 1 : 0);

#endif

RenderGBuffer(cullingResults, hdCamera, renderContext, cmd);

+ RenderMyPass(cullingResults, hdCamera, renderContext, cmd);

// We can now bind the normal buffer to be use by any effect

m_SharedRTManager.BindNormalBuffer(cmd);

RenderMyPass is implemented using RenderGBuffer as a reference.

void RenderMyPass(CullingResults cull, HDCamera hdCamera, ScriptableRenderContext renderContext, CommandBuffer cmd)

{

if (hdCamera.frameSettings.litShaderMode != LitShaderMode.Deferred)

return;

using (new ProfilingSample(cmd, m_CurrentDebugDisplaySettings.IsDebugDisplayEnabled() ? "MyPass Debug" : "MyPass", CustomSamplerId.MyPass.GetSampler()))

{

// setup GBuffer for rendering

HDUtils.SetRenderTarget(cmd, m_GbufferManager.GetBuffersRTI(hdCamera.frameSettings), m_SharedRTManager.GetDepthStencilBuffer());

var rendererList = RendererList.Create(CreateOpaqueRendererListDesc(cull, hdCamera.camera, HDShaderPassNames.s_MyPassName, m_CurrentRendererConfigurationBakedLighting));

DrawOpaqueRendererList(renderContext, cmd, hdCamera.frameSettings, rendererList);

m_GbufferManager.BindBufferAsTextures(cmd);

}

}

Add an enum member to HDCustomSamplerId.cs.

namespace UnityEngine.Experimental.Rendering.HDPipeline

{

public enum CustomSamplerId

{

PushGlobalParameters,

CopySetDepthBuffer,

CopyDepthBuffer,

HTileForSSS,

Forward,

RenderSSAO,

ResolveSSAO,

RenderShadowMaps,

ScreenSpaceShadows,

BuildLightList,

BlitToFinalRT,

Distortion,

ApplyDistortion,

DepthPrepass,

TransparentDepthPrepass,

GBuffer,

+ MyPass,

DBufferRender,

DBufferPrepareDrawData,

...

Add the pass name string and its ShaderTagId to HDStringConstants.cs.

public static class HDShaderPassNames

{

// ShaderPass string - use to have consistent name through the code

public static readonly string s_EmptyStr = "";

public static readonly string s_ForwardStr = "Forward";

public static readonly string s_DepthOnlyStr = "DepthOnly";

public static readonly string s_DepthForwardOnlyStr = "DepthForwardOnly";

public static readonly string s_ForwardOnlyStr = "ForwardOnly";

public static readonly string s_GBufferStr = "GBuffer";

+ public static readonly string s_MyPassStr = "MyPass";

public static readonly string s_GBufferWithPrepassStr = "GBufferWithPrepass";

...

// ShaderPass name

public static readonly ShaderTagId s_EmptyName = new ShaderTagId(s_EmptyStr);

public static readonly ShaderTagId s_ForwardName = new ShaderTagId(s_ForwardStr);

public static readonly ShaderTagId s_DepthOnlyName = new ShaderTagId(s_DepthOnlyStr);

public static readonly ShaderTagId s_DepthForwardOnlyName = new ShaderTagId(s_DepthForwardOnlyStr);

public static readonly ShaderTagId s_ForwardOnlyName = new ShaderTagId(s_ForwardOnlyStr);

public static readonly ShaderTagId s_GBufferName = new ShaderTagId(s_GBufferStr);

+ public static readonly ShaderTagId s_MyPassName = new ShaderTagId(s_MyPassStr);

public static readonly ShaderTagId s_GBufferWithPrepassName = new ShaderTagId(s_GBufferWithPrepassStr);

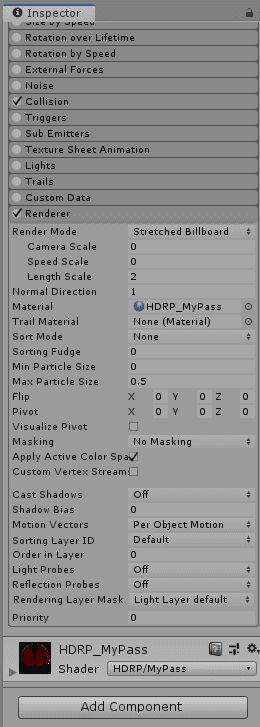

...MyPassシェーダからMaterialを作成し、適当なオブジェクトに割り当てると 真っ赤な金属のマテリアルとして表示されるようになりました。
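The same assignment can also be done from a script instead of through the editor. The following is a minimal sketch, assuming the shader is declared as `Shader "HDRP/MyPass"`. The component name `AssignMyPassMaterial` is hypothetical and not part of the article's project.

```csharp
using UnityEngine;

// Sketch: builds a material from the custom shader and assigns it to this object's renderer.
[RequireComponent(typeof(Renderer))]
public class AssignMyPassMaterial : MonoBehaviour
{
    // Assumption: the shader is declared as Shader "HDRP/MyPass".
    const string ShaderName = "HDRP/MyPass";

    Material material;

    void Start()
    {
        Shader shader = Shader.Find(ShaderName);
        if (shader == null)
        {
            Debug.LogError("Shader not found: " + ShaderName);
            return;
        }

        material = new Material(shader);
        GetComponent<Renderer>().sharedMaterial = material;
    }

    void OnDestroy()
    {
        // Materials created at runtime are not cleaned up automatically.
        if (material != null) Destroy(material);
    }
}
```

Note that RenderMyPass returns early unless the camera's litShaderMode is Deferred, so the object is drawn by MyPass only when the Lit Shader Mode is set to Deferred.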

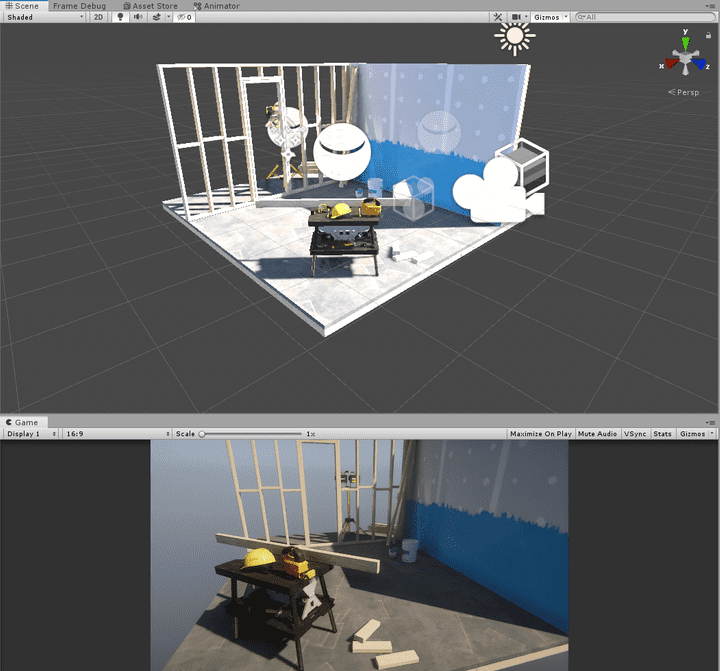

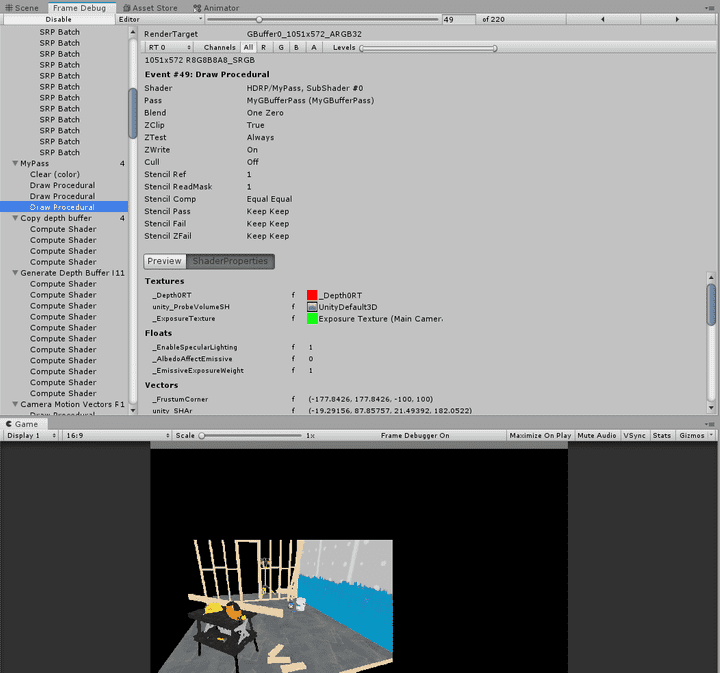

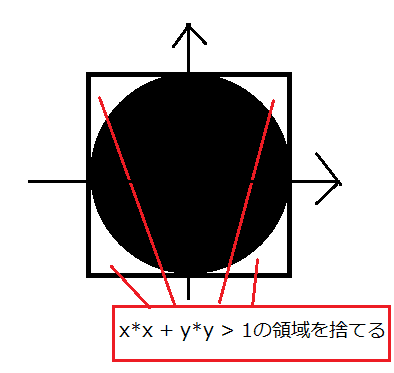

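Creating a pass to render the pseudo-fluid

Here I port the pseudo-fluid rendering that I previously implemented for the legacy pipeline to HDRP.

Rendering fluids in Unity with CommandBuffer | 測度ゼロの抹茶チョコ

Full source code

First, here is all of the modified code in full. The explanation follows afterwards.

I created MyPass.shader.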

Shader "HDRP/MyPass"

{

Properties

{

// Versioning of material to help for upgrading

[HideInInspector] _HdrpVersion("_HdrpVersion", Float) = 2

// Following set of parameters represent the parameters node inside the MaterialGraph.

// They are use to fill a SurfaceData. With a MaterialGraph this should not exist.

// Reminder. Color here are in linear but the UI (color picker) do the conversion sRGB to linear

_BaseColor("BaseColor", Color) = (1,1,1,1)

_BaseColorMap("BaseColorMap", 2D) = "white" {}

[HideInInspector] _BaseColorMap_MipInfo("_BaseColorMap_MipInfo", Vector) = (0, 0, 0, 0)

_Metallic("_Metallic", Range(0.0, 1.0)) = 0

_Smoothness("Smoothness", Range(0.0, 1.0)) = 0.5

_MaskMap("MaskMap", 2D) = "white" {}

_SmoothnessRemapMin("SmoothnessRemapMin", Float) = 0.0

_SmoothnessRemapMax("SmoothnessRemapMax", Float) = 1.0

_AORemapMin("AORemapMin", Float) = 0.0

_AORemapMax("AORemapMax", Float) = 1.0

_NormalMap("NormalMap", 2D) = "bump" {} // Tangent space normal map

_NormalMapOS("NormalMapOS", 2D) = "white" {} // Object space normal map - no good default value

_NormalScale("_NormalScale", Range(0.0, 8.0)) = 1

_BentNormalMap("_BentNormalMap", 2D) = "bump" {}

_BentNormalMapOS("_BentNormalMapOS", 2D) = "white" {}

_HeightMap("HeightMap", 2D) = "black" {}

// Caution: Default value of _HeightAmplitude must be (_HeightMax - _HeightMin) * 0.01

// Those two properties are computed from the ones exposed in the UI and depends on the displaement mode so they are separate because we don't want to lose information upon displacement mode change.

[HideInInspector] _HeightAmplitude("Height Amplitude", Float) = 0.02 // In world units. This will be computed in the UI.

[HideInInspector] _HeightCenter("Height Center", Range(0.0, 1.0)) = 0.5 // In texture space

[Enum(MinMax, 0, Amplitude, 1)] _HeightMapParametrization("Heightmap Parametrization", Int) = 0

// These parameters are for vertex displacement/Tessellation

_HeightOffset("Height Offset", Float) = 0

// MinMax mode

_HeightMin("Heightmap Min", Float) = -1

_HeightMax("Heightmap Max", Float) = 1

// Amplitude mode

_HeightTessAmplitude("Amplitude", Float) = 2.0 // in Centimeters

_HeightTessCenter("Height Center", Range(0.0, 1.0)) = 0.5 // In texture space

// These parameters are for pixel displacement

_HeightPoMAmplitude("Height Amplitude", Float) = 2.0 // In centimeters

_DetailMap("DetailMap", 2D) = "black" {}

_DetailAlbedoScale("_DetailAlbedoScale", Range(0.0, 2.0)) = 1

_DetailNormalScale("_DetailNormalScale", Range(0.0, 2.0)) = 1

_DetailSmoothnessScale("_DetailSmoothnessScale", Range(0.0, 2.0)) = 1

_TangentMap("TangentMap", 2D) = "bump" {}

_TangentMapOS("TangentMapOS", 2D) = "white" {}

_Anisotropy("Anisotropy", Range(-1.0, 1.0)) = 0

_AnisotropyMap("AnisotropyMap", 2D) = "white" {}

_SubsurfaceMask("Subsurface Radius", Range(0.0, 1.0)) = 1.0

_SubsurfaceMaskMap("Subsurface Radius Map", 2D) = "white" {}

_Thickness("Thickness", Range(0.0, 1.0)) = 1.0

_ThicknessMap("Thickness Map", 2D) = "white" {}

_ThicknessRemap("Thickness Remap", Vector) = (0, 1, 0, 0)

_IridescenceThickness("Iridescence Thickness", Range(0.0, 1.0)) = 1.0

_IridescenceThicknessMap("Iridescence Thickness Map", 2D) = "white" {}

_IridescenceThicknessRemap("Iridescence Thickness Remap", Vector) = (0, 1, 0, 0)

_IridescenceMask("Iridescence Mask", Range(0.0, 1.0)) = 1.0

_IridescenceMaskMap("Iridescence Mask Map", 2D) = "white" {}

_CoatMask("Coat Mask", Range(0.0, 1.0)) = 0.0

_CoatMaskMap("CoatMaskMap", 2D) = "white" {}

[ToggleUI] _EnergyConservingSpecularColor("_EnergyConservingSpecularColor", Float) = 1.0

_SpecularColor("SpecularColor", Color) = (1, 1, 1, 1)

_SpecularColorMap("SpecularColorMap", 2D) = "white" {}

// Following options are for the GUI inspector and different from the input parameters above

// These option below will cause different compilation flag.

[ToggleUI] _EnableSpecularOcclusion("Enable specular occlusion", Float) = 0.0

[HDR] _EmissiveColor("EmissiveColor", Color) = (0, 0, 0)

// Used only to serialize the LDR and HDR emissive color in the material UI,

// in the shader only the _EmissiveColor should be used

[HideInInspector] _EmissiveColorLDR("EmissiveColor LDR", Color) = (0, 0, 0)

_EmissiveColorMap("EmissiveColorMap", 2D) = "white" {}

[ToggleUI] _AlbedoAffectEmissive("Albedo Affect Emissive", Float) = 0.0

[HideInInspector] _EmissiveIntensityUnit("Emissive Mode", Int) = 0

[ToggleUI] _UseEmissiveIntensity("Use Emissive Intensity", Int) = 0

_EmissiveIntensity("Emissive Intensity", Float) = 1

_EmissiveExposureWeight("Emissive Pre Exposure", Range(0.0, 1.0)) = 1.0

_DistortionVectorMap("DistortionVectorMap", 2D) = "black" {}

[ToggleUI] _DistortionEnable("Enable Distortion", Float) = 0.0

[ToggleUI] _DistortionDepthTest("Distortion Depth Test Enable", Float) = 1.0

[Enum(Add, 0, Multiply, 1, Replace, 2)] _DistortionBlendMode("Distortion Blend Mode", Int) = 0

[HideInInspector] _DistortionSrcBlend("Distortion Blend Src", Int) = 0

[HideInInspector] _DistortionDstBlend("Distortion Blend Dst", Int) = 0

[HideInInspector] _DistortionBlurSrcBlend("Distortion Blur Blend Src", Int) = 0

[HideInInspector] _DistortionBlurDstBlend("Distortion Blur Blend Dst", Int) = 0

[HideInInspector] _DistortionBlurBlendMode("Distortion Blur Blend Mode", Int) = 0

_DistortionScale("Distortion Scale", Float) = 1

_DistortionVectorScale("Distortion Vector Scale", Float) = 2

_DistortionVectorBias("Distortion Vector Bias", Float) = -1

_DistortionBlurScale("Distortion Blur Scale", Float) = 1

_DistortionBlurRemapMin("DistortionBlurRemapMin", Float) = 0.0

_DistortionBlurRemapMax("DistortionBlurRemapMax", Float) = 1.0

[ToggleUI] _UseShadowThreshold("_UseShadowThreshold", Float) = 0.0

[ToggleUI] _AlphaCutoffEnable("Alpha Cutoff Enable", Float) = 0.0

_AlphaCutoff("Alpha Cutoff", Range(0.0, 1.0)) = 0.5

_AlphaCutoffShadow("_AlphaCutoffShadow", Range(0.0, 1.0)) = 0.5

_AlphaCutoffPrepass("_AlphaCutoffPrepass", Range(0.0, 1.0)) = 0.5

_AlphaCutoffPostpass("_AlphaCutoffPostpass", Range(0.0, 1.0)) = 0.5

[ToggleUI] _TransparentDepthPrepassEnable("_TransparentDepthPrepassEnable", Float) = 0.0

[ToggleUI] _TransparentBackfaceEnable("_TransparentBackfaceEnable", Float) = 0.0

[ToggleUI] _TransparentDepthPostpassEnable("_TransparentDepthPostpassEnable", Float) = 0.0

_TransparentSortPriority("_TransparentSortPriority", Float) = 0

// Transparency

[Enum(None, 0, Box, 1, Sphere, 2)]_RefractionModel("Refraction Model", Int) = 0

[Enum(Proxy, 1, HiZ, 2)]_SSRefractionProjectionModel("Refraction Projection Model", Int) = 0

_Ior("Index Of Refraction", Range(1.0, 2.5)) = 1.0

_ThicknessMultiplier("Thickness Multiplier", Float) = 1.0

_TransmittanceColor("Transmittance Color", Color) = (1.0, 1.0, 1.0)

_TransmittanceColorMap("TransmittanceColorMap", 2D) = "white" {}

_ATDistance("Transmittance Absorption Distance", Float) = 1.0

[ToggleUI] _TransparentWritingMotionVec("_TransparentWritingMotionVec", Float) = 0.0

// Stencil state

// Forward

[HideInInspector] _StencilRef("_StencilRef", Int) = 2 // StencilLightingUsage.RegularLighting

[HideInInspector] _StencilWriteMask("_StencilWriteMask", Int) = 3 // StencilMask.Lighting

// GBuffer

[HideInInspector] _StencilRefGBuffer("_StencilRefGBuffer", Int) = 2 // StencilLightingUsage.RegularLighting

[HideInInspector] _StencilWriteMaskGBuffer("_StencilWriteMaskGBuffer", Int) = 3 // StencilMask.Lighting

// Depth prepass

[HideInInspector] _StencilRefDepth("_StencilRefDepth", Int) = 0 // Nothing

[HideInInspector] _StencilWriteMaskDepth("_StencilWriteMaskDepth", Int) = 32 // DoesntReceiveSSR

// Motion vector pass

[HideInInspector] _StencilRefMV("_StencilRefMV", Int) = 128 // StencilBitMask.ObjectMotionVectors

[HideInInspector] _StencilWriteMaskMV("_StencilWriteMaskMV", Int) = 128 // StencilBitMask.ObjectMotionVectors

// Distortion vector pass

[HideInInspector] _StencilRefDistortionVec("_StencilRefDistortionVec", Int) = 64 // StencilBitMask.DistortionVectors

[HideInInspector] _StencilWriteMaskDistortionVec("_StencilWriteMaskDistortionVec", Int) = 64 // StencilBitMask.DistortionVectors

// Blending state

[HideInInspector] _SurfaceType("__surfacetype", Float) = 0.0

[HideInInspector] _BlendMode("__blendmode", Float) = 0.0

[HideInInspector] _SrcBlend("__src", Float) = 1.0

[HideInInspector] _DstBlend("__dst", Float) = 0.0

[HideInInspector] _AlphaSrcBlend("__alphaSrc", Float) = 1.0

[HideInInspector] _AlphaDstBlend("__alphaDst", Float) = 0.0

[HideInInspector][ToggleUI] _ZWrite("__zw", Float) = 1.0

[HideInInspector] _CullMode("__cullmode", Float) = 2.0

[HideInInspector] _CullModeForward("__cullmodeForward", Float) = 2.0 // This mode is dedicated to Forward to correctly handle backface then front face rendering thin transparent

[Enum(UnityEditor.Experimental.Rendering.HDPipeline.TransparentCullMode)] _TransparentCullMode("_TransparentCullMode", Int) = 2 // Back culling by default

[HideInInspector] _ZTestDepthEqualForOpaque("_ZTestDepthEqualForOpaque", Int) = 4 // Less equal

[HideInInspector] _ZTestModeDistortion("_ZTestModeDistortion", Int) = 8

[HideInInspector] _ZTestGBuffer("_ZTestGBuffer", Int) = 4

[Enum(UnityEngine.Rendering.CompareFunction)] _ZTestTransparent("Transparent ZTest", Int) = 4 // Less equal

[ToggleUI] _EnableFogOnTransparent("Enable Fog", Float) = 1.0

[ToggleUI] _EnableBlendModePreserveSpecularLighting("Enable Blend Mode Preserve Specular Lighting", Float) = 1.0

[ToggleUI] _DoubleSidedEnable("Double sided enable", Float) = 0.0

[Enum(Flip, 0, Mirror, 1, None, 2)] _DoubleSidedNormalMode("Double sided normal mode", Float) = 1

[HideInInspector] _DoubleSidedConstants("_DoubleSidedConstants", Vector) = (1, 1, -1, 0)

[Enum(UV0, 0, UV1, 1, UV2, 2, UV3, 3, Planar, 4, Triplanar, 5)] _UVBase("UV Set for base", Float) = 0

_TexWorldScale("Scale to apply on world coordinate", Float) = 1.0

[HideInInspector] _InvTilingScale("Inverse tiling scale = 2 / (abs(_BaseColorMap_ST.x) + abs(_BaseColorMap_ST.y))", Float) = 1

[HideInInspector] _UVMappingMask("_UVMappingMask", Color) = (1, 0, 0, 0)

[Enum(TangentSpace, 0, ObjectSpace, 1)] _NormalMapSpace("NormalMap space", Float) = 0

// Following enum should be material feature flags (i.e bitfield), however due to Gbuffer encoding constrain many combination exclude each other

// so we use this enum as "material ID" which can be interpreted as preset of bitfield of material feature

// The only material feature flag that can be added in all cases is clear coat

[Enum(Subsurface Scattering, 0, Standard, 1, Anisotropy, 2, Iridescence, 3, Specular Color, 4, Translucent, 5)] _MaterialID("MaterialId", Int) = 1 // MaterialId.Standard

[ToggleUI] _TransmissionEnable("_TransmissionEnable", Float) = 1.0

[Enum(None, 0, Vertex displacement, 1, Pixel displacement, 2)] _DisplacementMode("DisplacementMode", Int) = 0

[ToggleUI] _DisplacementLockObjectScale("displacement lock object scale", Float) = 1.0

[ToggleUI] _DisplacementLockTilingScale("displacement lock tiling scale", Float) = 1.0

[ToggleUI] _DepthOffsetEnable("Depth Offset View space", Float) = 0.0

[ToggleUI] _EnableGeometricSpecularAA("EnableGeometricSpecularAA", Float) = 0.0

_SpecularAAScreenSpaceVariance("SpecularAAScreenSpaceVariance", Range(0.0, 1.0)) = 0.1

_SpecularAAThreshold("SpecularAAThreshold", Range(0.0, 1.0)) = 0.2

_PPDMinSamples("Min sample for POM", Range(1.0, 64.0)) = 5

_PPDMaxSamples("Max sample for POM", Range(1.0, 64.0)) = 15

_PPDLodThreshold("Start lod to fade out the POM effect", Range(0.0, 16.0)) = 5

_PPDPrimitiveLength("Primitive length for POM", Float) = 1

_PPDPrimitiveWidth("Primitive width for POM", Float) = 1

[HideInInspector] _InvPrimScale("Inverse primitive scale for non-planar POM", Vector) = (1, 1, 0, 0)

[Enum(UV0, 0, UV1, 1, UV2, 2, UV3, 3)] _UVDetail("UV Set for detail", Float) = 0

[HideInInspector] _UVDetailsMappingMask("_UVDetailsMappingMask", Color) = (1, 0, 0, 0)

[ToggleUI] _LinkDetailsWithBase("LinkDetailsWithBase", Float) = 1.0

[Enum(Use Emissive Color, 0, Use Emissive Mask, 1)] _EmissiveColorMode("Emissive color mode", Float) = 1

[Enum(UV0, 0, UV1, 1, UV2, 2, UV3, 3, Planar, 4, Triplanar, 5)] _UVEmissive("UV Set for emissive", Float) = 0

_TexWorldScaleEmissive("Scale to apply on world coordinate", Float) = 1.0

[HideInInspector] _UVMappingMaskEmissive("_UVMappingMaskEmissive", Color) = (1, 0, 0, 0)

// Caution: C# code in BaseLitUI.cs call LightmapEmissionFlagsProperty() which assume that there is an existing "_EmissionColor"

// value that exist to identify if the GI emission need to be enabled.

// In our case we don't use such a mechanism but need to keep the code quiet. We declare the value and always enable it.

// TODO: Fix the code in legacy unity so we can customize the beahvior for GI

_EmissionColor("Color", Color) = (1, 1, 1)

// HACK: GI Baking system relies on some properties existing in the shader ("_MainTex", "_Cutoff" and "_Color") for opacity handling, so we need to store our version of those parameters in the hard-coded name the GI baking system recognizes.

_MainTex("Albedo", 2D) = "white" {}

_Color("Color", Color) = (1,1,1,1)

_Cutoff("Alpha Cutoff", Range(0.0, 1.0)) = 0.5

[ToggleUI] _SupportDecals("Support Decals", Float) = 1.0

[ToggleUI] _ReceivesSSR("Receives SSR", Float) = 1.0

[HideInInspector] _DiffusionProfile("Obsolete, kept for migration purpose", Int) = 0

[HideInInspector] _DiffusionProfileAsset("Diffusion Profile Asset", Vector) = (0, 0, 0, 0)

[HideInInspector] _DiffusionProfileHash("Diffusion Profile Hash", Float) = 0

_Radius("Radius", Float) = 1.0

}

HLSLINCLUDE

#pragma target 4.5

#pragma only_renderers d3d11 ps4 xboxone vulkan metal switch

//-------------------------------------------------------------------------------------

// Variant

//-------------------------------------------------------------------------------------

#pragma shader_feature_local _ALPHATEST_ON

#pragma shader_feature_local _DEPTHOFFSET_ON

#pragma shader_feature_local _DOUBLESIDED_ON

#pragma shader_feature_local _ _VERTEX_DISPLACEMENT _PIXEL_DISPLACEMENT

#pragma shader_feature_local _VERTEX_DISPLACEMENT_LOCK_OBJECT_SCALE

#pragma shader_feature_local _DISPLACEMENT_LOCK_TILING_SCALE

#pragma shader_feature_local _PIXEL_DISPLACEMENT_LOCK_OBJECT_SCALE

#pragma shader_feature_local _ _REFRACTION_PLANE _REFRACTION_SPHERE

#pragma shader_feature_local _ _EMISSIVE_MAPPING_PLANAR _EMISSIVE_MAPPING_TRIPLANAR

#pragma shader_feature_local _ _MAPPING_PLANAR _MAPPING_TRIPLANAR

#pragma shader_feature_local _NORMALMAP_TANGENT_SPACE

#pragma shader_feature_local _ _REQUIRE_UV2 _REQUIRE_UV3

#pragma shader_feature_local _NORMALMAP

#pragma shader_feature_local _MASKMAP

#pragma shader_feature_local _BENTNORMALMAP

#pragma shader_feature_local _EMISSIVE_COLOR_MAP

#pragma shader_feature_local _ENABLESPECULAROCCLUSION

#pragma shader_feature_local _HEIGHTMAP

#pragma shader_feature_local _TANGENTMAP

#pragma shader_feature_local _ANISOTROPYMAP

#pragma shader_feature_local _DETAIL_MAP

#pragma shader_feature_local _SUBSURFACE_MASK_MAP

#pragma shader_feature_local _THICKNESSMAP

#pragma shader_feature_local _IRIDESCENCE_THICKNESSMAP

#pragma shader_feature_local _SPECULARCOLORMAP

#pragma shader_feature_local _TRANSMITTANCECOLORMAP

#pragma shader_feature_local _DISABLE_DECALS

#pragma shader_feature_local _DISABLE_SSR

#pragma shader_feature_local _ENABLE_GEOMETRIC_SPECULAR_AA

// Keyword for transparent

#pragma shader_feature _SURFACE_TYPE_TRANSPARENT

#pragma shader_feature_local _ _BLENDMODE_ALPHA _BLENDMODE_ADD _BLENDMODE_PRE_MULTIPLY

#pragma shader_feature_local _BLENDMODE_PRESERVE_SPECULAR_LIGHTING

#pragma shader_feature_local _ENABLE_FOG_ON_TRANSPARENT

#pragma shader_feature_local _TRANSPARENT_WRITES_MOTION_VEC

// MaterialFeature are used as shader feature to allow compiler to optimize properly

#pragma shader_feature_local _MATERIAL_FEATURE_SUBSURFACE_SCATTERING

#pragma shader_feature_local _MATERIAL_FEATURE_TRANSMISSION

#pragma shader_feature_local _MATERIAL_FEATURE_ANISOTROPY

#pragma shader_feature_local _MATERIAL_FEATURE_CLEAR_COAT

#pragma shader_feature_local _MATERIAL_FEATURE_IRIDESCENCE

#pragma shader_feature_local _MATERIAL_FEATURE_SPECULAR_COLOR

// enable dithering LOD crossfade

#pragma multi_compile _ LOD_FADE_CROSSFADE

//enable GPU instancing support

#pragma multi_compile_instancing

#pragma instancing_options renderinglayer

//-------------------------------------------------------------------------------------

// Define

//-------------------------------------------------------------------------------------

// This shader support vertex modification

#define HAVE_VERTEX_MODIFICATION

// If we use subsurface scattering, enable output split lighting (for forward pass)

#if defined(_MATERIAL_FEATURE_SUBSURFACE_SCATTERING) && !defined(_SURFACE_TYPE_TRANSPARENT)

#define OUTPUT_SPLIT_LIGHTING

#endif

#if defined(_TRANSPARENT_WRITES_MOTION_VEC) && defined(_SURFACE_TYPE_TRANSPARENT)

#define _WRITE_TRANSPARENT_MOTION_VECTOR

#endif

//-------------------------------------------------------------------------------------

// Include

//-------------------------------------------------------------------------------------

#include "Packages/com.unity.render-pipelines.core/ShaderLibrary/Common.hlsl"

#include "Packages/com.unity.render-pipelines.high-definition/Runtime/ShaderLibrary/ShaderVariables.hlsl"

#include "Packages/com.unity.render-pipelines.high-definition/Runtime/RenderPipeline/ShaderPass/FragInputs.hlsl"

#include "Packages/com.unity.render-pipelines.high-definition/Runtime/RenderPipeline/ShaderPass/ShaderPass.cs.hlsl"

//-------------------------------------------------------------------------------------

// variable declaration

//-------------------------------------------------------------------------------------

// #include "Packages/com.unity.render-pipelines.high-definition/Runtime/Material/Lit/Lit.cs.hlsl"

#include "Packages/com.unity.render-pipelines.high-definition/Runtime/Material/Lit/LitProperties.hlsl"

// TODO:

// Currently, Lit.hlsl and LitData.hlsl are included for every pass. Split Lit.hlsl in two:

// LitData.hlsl and LitShading.hlsl (merge into the existing LitData.hlsl).

// LitData.hlsl should be responsible for preparing shading parameters.

// LitShading.hlsl implements the light loop API.

// LitData.hlsl is included here, LitShading.hlsl is included below for shading passes only.

ENDHLSL

SubShader

{

// This tags allow to use the shader replacement features

Tags{ "RenderPipeline"="HDRenderPipeline" "RenderType" = "HDLitShader" }

Pass

{

Name "SceneSelectionPass"

Tags { "LightMode" = "SceneSelectionPass" }

Cull Off

HLSLPROGRAM

// Note: Require _ObjectId and _PassValue variables

// We reuse depth prepass for the scene selection, allow to handle alpha correctly as well as tessellation and vertex animation

#define SHADERPASS SHADERPASS_DEPTH_ONLY

#define SCENESELECTIONPASS // This will drive the output of the scene selection shader

#include "Packages/com.unity.render-pipelines.high-definition/Runtime/Material/Material.hlsl"

#include "Packages/com.unity.render-pipelines.high-definition/Runtime/Material/Lit/Lit.hlsl"

#include "Packages/com.unity.render-pipelines.high-definition/Runtime/Material/Lit/ShaderPass/LitDepthPass.hlsl"

#include "Packages/com.unity.render-pipelines.high-definition/Runtime/Material/Lit/LitData.hlsl"

#include "Packages/com.unity.render-pipelines.high-definition/Runtime/RenderPipeline/ShaderPass/ShaderPassDepthOnly.hlsl"

#pragma vertex Vert

#pragma fragment Frag

#pragma editor_sync_compilation

ENDHLSL

}

// Extracts information for lightmapping, GI (emission, albedo, ...)

// This pass it not used during regular rendering.

Pass

{

Name "META"

Tags{ "LightMode" = "META" }

Cull Off

HLSLPROGRAM

// Lightmap memo

// DYNAMICLIGHTMAP_ON is used when we have an "enlighten lightmap" ie a lightmap updated at runtime by enlighten.This lightmap contain indirect lighting from realtime lights and realtime emissive material.Offline baked lighting(from baked material / light,

// both direct and indirect lighting) will hand up in the "regular" lightmap->LIGHTMAP_ON.

#define SHADERPASS SHADERPASS_LIGHT_TRANSPORT

#include "Packages/com.unity.render-pipelines.high-definition/Runtime/Material/Material.hlsl"

#include "Packages/com.unity.render-pipelines.high-definition/Runtime/Material/Lit/Lit.hlsl"

#include "Packages/com.unity.render-pipelines.high-definition/Runtime/Material/Lit/ShaderPass/LitSharePass.hlsl"

#include "Packages/com.unity.render-pipelines.high-definition/Runtime/Material/Lit/LitData.hlsl"

#include "Packages/com.unity.render-pipelines.high-definition/Runtime/RenderPipeline/ShaderPass/ShaderPassLightTransport.hlsl"

#pragma vertex Vert

#pragma fragment Frag

ENDHLSL

}

Pass

{

Name "ShadowCaster"

Tags{ "LightMode" = "ShadowCaster" }

Cull[_CullMode]

ZClip [_ZClip]

ZWrite On

ZTest LEqual

ColorMask 0

HLSLPROGRAM

#define SHADERPASS SHADERPASS_SHADOWS

#include "Packages/com.unity.render-pipelines.high-definition/Runtime/Material/Material.hlsl"

#include "Packages/com.unity.render-pipelines.high-definition/Runtime/Material/Lit/Lit.hlsl"

#include "Packages/com.unity.render-pipelines.high-definition/Runtime/Material/Lit/ShaderPass/LitDepthPass.hlsl"

#include "Packages/com.unity.render-pipelines.high-definition/Runtime/Material/Lit/LitData.hlsl"

#include "Packages/com.unity.render-pipelines.high-definition/Runtime/RenderPipeline/ShaderPass/ShaderPassDepthOnly.hlsl"

#pragma vertex Vert

#pragma fragment Frag

ENDHLSL

}

Pass

{

Name "MyDepthPass"

Tags { "LightMode" = "MyDepthPass" }

Cull Back

ZTest LEqual

ZWrite On

Stencil

{

WriteMask 1

Ref 1

Comp Always

Pass Replace

}

HLSLPROGRAM

#pragma multi_compile _ DEBUG_DISPLAY

#pragma multi_compile _ LIGHTMAP_ON

#pragma multi_compile _ DIRLIGHTMAP_COMBINED

#pragma multi_compile _ DYNAMICLIGHTMAP_ON

#pragma multi_compile _ SHADOWS_SHADOWMASK

// Setup DECALS_OFF so the shader stripper can remove variants

#pragma multi_compile DECALS_OFF DECALS_3RT DECALS_4RT

#pragma multi_compile _ LIGHT_LAYERS

#define SHADERPASS SHADERPASS_GBUFFER

#include "Packages/com.unity.render-pipelines.high-definition/Runtime/Material/Material.hlsl"

#include "Packages/com.unity.render-pipelines.high-definition/Runtime/Material/Lit/Lit.hlsl"

#include "Packages/com.unity.render-pipelines.high-definition/Runtime/Material/Lit/ShaderPass/LitSharePass.hlsl"

#include "Packages/com.unity.render-pipelines.high-definition/Runtime/Material/Lit/LitData.hlsl"

#include "Packages/com.unity.render-pipelines.high-definition/Runtime/RenderPipeline/ShaderPass/VertMesh.hlsl"

PackedVaryingsType Vert(AttributesMesh inputMesh)

{

VaryingsType varyingsType;

varyingsType.vmesh = VertMesh(inputMesh);

return PackVaryingsType(varyingsType);

}

#ifdef TESSELLATION_ON

PackedVaryingsToPS VertTesselation(VaryingsToDS input)

{

VaryingsToPS output;

output.vmesh = VertMeshTesselation(input.vmesh);

return PackVaryingsToPS(output);

}

#include "Packages/com.unity.render-pipelines.high-definition/Runtime/RenderPipeline/ShaderPass/TessellationShare.hlsl"

#endif // TESSELLATION_ON

// Radius of the sphere impostor, in view-space units.

uniform float _Radius;

void Frag( PackedVaryingsToPS packedInput,

out float output: SV_Target,

out float outputDepth : SV_Depth

)

{

UNITY_SETUP_STEREO_EYE_INDEX_POST_VERTEX(packedInput);

FragInputs input = UnpackVaryingsMeshToFragInputs(packedInput.vmesh);

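// Treat the quad as a sphere impostor: map the UV to [-1, 1] and

// discard fragments that fall outside the unit circle.

float3 normal;

normal.xy = input.texCoord0.xy * 2 - 1;

float r2 = dot(normal.xy, normal.xy);

if (r2 > 1.0) discard;

normal.z = sqrt(1.0 - r2);

// Push the view-space position toward the camera by the sphere's height at this pixel.

float4 pixelPos = mul(UNITY_MATRIX_V, float4(input.positionRWS.xyz, 1)) + float4(0, 0, normal.z * _Radius, 0);

float4 clipSpacePos = mul(UNITY_MATRIX_P, pixelPos);

// Color target: linear 0-1 depth for the blur passes. Depth target: hardware depth.

output = Linear01Depth(clipSpacePos.z / clipSpacePos.w, _ZBufferParams);

outputDepth = clipSpacePos.z / clipSpacePos.w;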

}

#pragma vertex Vert

#pragma fragment Frag

ENDHLSL

}

Pass

{

Name "MyXBlurPass"

Tags { "LightMode" = "MyXBlurPass" }

Cull Off

ZTest Always

ZWrite Off

Stencil

{

ReadMask 1

Ref 1

Comp Equal

}

HLSLPROGRAM

#include "Packages/com.unity.render-pipelines.core/ShaderLibrary/Common.hlsl"

#include "Packages/com.unity.render-pipelines.core/ShaderLibrary/Color.hlsl"

#include "Packages/com.unity.render-pipelines.high-definition/Runtime/ShaderLibrary/ShaderVariables.hlsl"

#include "Packages/com.unity.render-pipelines.high-definition/Runtime/PostProcessing/Shaders/FXAA.hlsl"

#include "Packages/com.unity.render-pipelines.high-definition/Runtime/PostProcessing/Shaders/RTUpscale.hlsl"

struct Attributes

{

uint vertexID : SV_VertexID;

UNITY_VERTEX_INPUT_INSTANCE_ID

};

struct Varyings

{

float4 positionCS : SV_POSITION;

float2 texcoord : TEXCOORD0;

UNITY_VERTEX_OUTPUT_STEREO

};

float4 _UVTransform;

uniform sampler2D _Depth0RT;

Varyings Vert(Attributes input)

{

Varyings output;

UNITY_SETUP_INSTANCE_ID(input);

UNITY_INITIALIZE_VERTEX_OUTPUT_STEREO(output);

output.positionCS = GetFullScreenTriangleVertexPosition(input.vertexID);

output.texcoord = GetFullScreenTriangleTexCoord(input.vertexID);

return output;

}

void Frag(Varyings input, out float output: SV_Target)

{

UNITY_SETUP_STEREO_EYE_INDEX_POST_VERTEX(input);

float2 uv = input.texcoord.xy;

uv = ClampAndScaleUVForPoint(uv);

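// Depth at the center pixel; every tap is compared against it.

float depth = tex2D(_Depth0RT, uv).r;

// Half-width of the blur kernel, in pixels.

float radius = 50;

float sum = 0;

float wsum = 0;

// Bilateral filter along X: weight each tap by its distance from the

// center (w) and by how close its depth is to the center depth (g).

for (float x = -radius; x <= radius; x += 1) {

float sampleDepth = tex2D(_Depth0RT, uv + float2(x / _ScreenParams.x, 0)).x;

float r = x * 0.12;

float w = exp(-r * r);

float r2 = (sampleDepth - depth) * 100;

float g = exp(-r2 * r2);

sum += sampleDepth * w * g;

wsum += w * g;

}

if (wsum > 0) {

sum /= wsum;

}

output = sum;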

}

#pragma vertex Vert

#pragma fragment Frag

ENDHLSL

}

Pass

{

Name "MyYBlurPass"

Tags { "LightMode" = "MyYBlurPass" }

Cull Off

ZTest Always

ZWrite Off

Stencil

{

ReadMask 1

Ref 1

Comp Equal

}

HLSLPROGRAM

#include "Packages/com.unity.render-pipelines.core/ShaderLibrary/Common.hlsl"

#include "Packages/com.unity.render-pipelines.core/ShaderLibrary/Color.hlsl"

#include "Packages/com.unity.render-pipelines.high-definition/Runtime/ShaderLibrary/ShaderVariables.hlsl"

#include "Packages/com.unity.render-pipelines.high-definition/Runtime/PostProcessing/Shaders/FXAA.hlsl"

#include "Packages/com.unity.render-pipelines.high-definition/Runtime/PostProcessing/Shaders/RTUpscale.hlsl"

struct Attributes

{

uint vertexID : SV_VertexID;

UNITY_VERTEX_INPUT_INSTANCE_ID

};

struct Varyings

{

float4 positionCS : SV_POSITION;

float2 texcoord : TEXCOORD0;

UNITY_VERTEX_OUTPUT_STEREO

};

uniform sampler2D _Depth1RT;

Varyings Vert(Attributes input)

{

Varyings output;

UNITY_SETUP_INSTANCE_ID(input);

UNITY_INITIALIZE_VERTEX_OUTPUT_STEREO(output);

output.positionCS = GetFullScreenTriangleVertexPosition(input.vertexID);

output.texcoord = GetFullScreenTriangleTexCoord(input.vertexID);

return output;

}

void Frag(Varyings input, out float output: SV_Target)

{

UNITY_SETUP_STEREO_EYE_INDEX_POST_VERTEX(input);

float2 uv = input.texcoord.xy;

uv = ClampAndScaleUVForPoint(uv);

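// Depth at the center pixel; every tap is compared against it.

float depth = tex2D(_Depth1RT, uv).r;

// Half-width of the blur kernel, in pixels.

float radius = 50;

float sum = 0;

float wsum = 0;

// Bilateral filter along Y, reading the result of the X blur: weight each tap by

// its distance from the center (w) and by its depth difference from the center (g).

for (float x = -radius; x <= radius; x += 1) {

float sampleDepth = tex2D(_Depth1RT, uv + float2(0, x / _ScreenParams.y)).x;

float r = x * 0.12;

float w = exp(-r * r);

float r2 = (sampleDepth - depth) * 100;

float g = exp(-r2 * r2);

sum += sampleDepth * w * g;

wsum += w * g;

}

if (wsum > 0) {

sum /= wsum;

}

output = sum;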

}

#pragma vertex Vert

#pragma fragment Frag

ENDHLSL

}

Pass {

Name "MyGBufferPass"

Tags { "LightMode" = "MyGBufferPass" }

Cull Off

ZTest Always

ZWrite On

Stencil

{

ReadMask 1

Ref 1

Comp Equal

// Comp Always

}

HLSLPROGRAM

#include "Packages/com.unity.render-pipelines.core/ShaderLibrary/Common.hlsl"

#include "Packages/com.unity.render-pipelines.core/ShaderLibrary/Color.hlsl"

#include "Packages/com.unity.render-pipelines.high-definition/Runtime/ShaderLibrary/ShaderVariables.hlsl"

#include "Packages/com.unity.render-pipelines.high-definition/Runtime/PostProcessing/Shaders/FXAA.hlsl"

#include "Packages/com.unity.render-pipelines.high-definition/Runtime/PostProcessing/Shaders/RTUpscale.hlsl"

#define SHADERPASS SHADERPASS_GBUFFER

#ifdef DEBUG_DISPLAY

#include "Packages/com.unity.render-pipelines.high-definition/Runtime/Debug/DebugDisplay.hlsl"

#endif

#include "Packages/com.unity.render-pipelines.high-definition/Runtime/Material/Material.hlsl"

#include "Packages/com.unity.render-pipelines.high-definition/Runtime/Material/Lit/Lit.hlsl"

#include "Packages/com.unity.render-pipelines.high-definition/Runtime/Material/Lit/ShaderPass/LitSharePass.hlsl"

#include "Packages/com.unity.render-pipelines.high-definition/Runtime/Material/Lit/LitData.hlsl"

#include "Packages/com.unity.render-pipelines.high-definition/Runtime/RenderPipeline/ShaderPass/VertMesh.hlsl"

uniform sampler2D _Depth0RT;

uniform float4 _FrustumCorner;

VaryingsMeshType MyVertMesh(AttributesMesh input, uint vertexID) {

VaryingsMeshType output = (VaryingsMeshType)0;

UNITY_SETUP_INSTANCE_ID(input);

UNITY_TRANSFER_INSTANCE_ID(input, output);

#ifdef ATTRIBUTES_NEED_NORMAL

float3 normalWS = TransformObjectToWorldNormal(input.normalOS);

#else

float3 normalWS = float3(0.0, 0.0, 0.0); // We need this case to be able to compile ApplyVertexModification that doesn't use normal.

#endif

output.positionCS = GetFullScreenTriangleVertexPosition(vertexID);

output.texCoord0 = GetFullScreenTriangleTexCoord(vertexID);

return output;

}

PackedVaryingsType Vert(AttributesMesh inputMesh, uint vertexID: SV_VertexID)

{

VaryingsType varyingsType;

// varyingsType.vmesh = VertMesh(inputMesh);

varyingsType.vmesh = MyVertMesh(inputMesh, vertexID);

return PackVaryingsType(varyingsType);

}

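// Reconstructs the view-space position of a pixel from its linear 0-1 depth.

// _FrustumCorner.xy is the x range and .zw the y range of the view frustum at the far plane.

float3 uvToEyeSpacePos(float2 uv, sampler2D depth)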

{

float d = tex2D(depth, ClampAndScaleUVForPoint(uv)).x;

float3 frustumRay = float3(

lerp(_FrustumCorner.x, _FrustumCorner.y, uv.x),

lerp(_FrustumCorner.z, _FrustumCorner.w, uv.y),

-_ProjectionParams.z

);

return frustumRay * d;

}

#define _DEPTHOFFSET_ON

void Frag(PackedVaryingsType packedInput,

OUTPUT_GBUFFER(outGBuffer)

// #ifdef _DEPTHOFFSET_ON

, out float outputDepth : SV_Depth

// #endif

)

{

UNITY_SETUP_STEREO_EYE_INDEX_POST_VERTEX(packedInput);

FragInputs input = UnpackVaryingsMeshToFragInputs(packedInput.vmesh);

float2 uv = input.texCoord0.xy;

float3 eyeSpacePos = uvToEyeSpacePos(uv, _Depth0RT);

float4 clipSpacePos = mul(UNITY_MATRIX_P, float4(eyeSpacePos, 1));

outputDepth = clipSpacePos.z / clipSpacePos.w;

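// Estimate the surface tangents with forward and backward differences, keeping

// whichever has the smaller depth change to avoid artifacts at silhouettes.

float3 ddx = uvToEyeSpacePos(uv + float2(1 / _ScreenParams.x, 0), _Depth0RT) - eyeSpacePos;

float3 ddx2 = eyeSpacePos - uvToEyeSpacePos(uv - float2(1 / _ScreenParams.x, 0), _Depth0RT);

if (abs(ddx2.z) < abs(ddx.z)) {

ddx = ddx2;

}

float3 ddy = uvToEyeSpacePos(uv + float2(0, 1 / _ScreenParams.y), _Depth0RT) - eyeSpacePos;

float3 ddy2 = eyeSpacePos - uvToEyeSpacePos(uv - float2(0, 1 / _ScreenParams.y), _Depth0RT);

if (abs(ddy2.z) < abs(ddy.z)) {

ddy = ddy2;

}

float3 normal = cross(ddx, ddy);

normal = normalize(normal);

// The rotation part of the view matrix is orthonormal, so its transpose

// maps the view-space normal back to world space.

float4 worldSpacewNormal = mul(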

transpose(UNITY_MATRIX_V),

float4(normal, 0)

);

// input.positionSS is SV_Position

PositionInputs posInput = GetPositionInput(input.positionSS.xy, _ScreenSize.zw, input.positionSS.z, input.positionSS.w, input.positionRWS);

#ifdef VARYINGS_NEED_POSITION_WS